AI language models have gained some notoriety in the last year or so. The following passage was generated solely with GPT-3 when prompted to write a short passage on the subject of this blog.

“Most AI text generators rely on deep-learning algorithms and natural language processing to generate text. This means the system is trained on a large corpus of text and learns how to produce text by recognizing patterns in the language. The result is a text generator that can produce text that is at least somewhat readable, even if it is not perfect.

The level of competency of AI text generators varies, depending on the amount of data and complexity of the algorithms used. Some AI text generators are able to produce simple sentences with basic grammar. Others are able to generate more complex text that can be used for basic tasks like summarizing articles.

The accuracy of AI text generators is also increasing as they are trained on larger datasets, and more advanced algorithms are used. Such improvements have enabled AI text generators to be used for more complex tasks. For example, AI text generators can be used to generate marketing copy, product descriptions, and even news articles.

Overall, AI text generators are becoming increasingly competent and are proving to be useful tools for a variety of tasks. As the technology continues to improve, AI text generators are likely to become even more useful in the future.”

It’s hard to deny how impressive modern AI language models are, but of course, there are many debates surrounding them and their uses.

Here, we’ll investigate some of the strengths and weaknesses of AI language models.

The Strengths of AI Text Generation

Like other transformer models, GPT-3 is trained on a huge corpus of internet data, books, and other texts. It extracts meaning from text and learns how to reply to various prompts.
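To make "learning patterns from a corpus" concrete, here is a deliberately tiny sketch. It is not how GPT-3 works: GPT-3 is a large transformer, whereas this toy only counts which word tends to follow which. The corpus and the function names `train_bigrams` and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def generate(following: dict[str, Counter], start: str, length: int = 5) -> str:
    """Greedily extend `start` with the most frequent next word."""
    out = [start]
    for _ in range(length - 1):
        candidates = following.get(out[-1])
        if not candidates:
            break  # the last word never had a successor in the corpus
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Toy corpus, invented for this example.
corpus = "the cat sat on the mat . the cat sat on the sofa ."
model = train_bigrams(corpus)
print(generate(model, "the", length=4))
```

Even this crude counter produces text that resembles its training data, which hints at why models trained on vastly more text, with far richer architectures, can sound so fluent.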

The core strengths of GPT-3 include:

- Scale: AI text generators can create a mountain of text in mere seconds and avoid plagiarism by effectively rewriting other content available on the subject. With GPT-3, you can convert all available information on a topic into one super-article.

- Flexibility: While AI text generators are best known for their text-to-text ability, they can also extract named entities from text (useful for databasing and research), summarize articles (helpful in academia), translate, and even perform sentiment analysis.

- Cost: Generating text with AI is theoretically cheaper; however, when you factor in fact-checking and editing, this advantage does diminish somewhat.
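The flexibility point can be sketched in a few lines: the same model handles different tasks simply because the prompt changes. The template wording and the `build_prompt` helper below are hypothetical, written for this example rather than taken from any vendor's documentation, and no model is actually called.

```python
# Hypothetical prompt templates; the wording is illustrative only.
TEMPLATES = {
    "summarize": "Summarize the following text in one sentence:\n\n{text}",
    "entities": "List the people, places, and organizations named in this text:\n\n{text}",
    "sentiment": "Is the sentiment of this text positive, negative, or neutral?\n\n{text}",
    "translate": "Translate the following text into French:\n\n{text}",
}


def build_prompt(task: str, text: str) -> str:
    """Wrap `text` in the instruction for the requested task."""
    if task not in TEMPLATES:
        raise ValueError(f"unknown task: {task!r}")
    return TEMPLATES[task].format(text=text.strip())


review = "  The delivery was late, but the support team was wonderful.  "
for task in ("summarize", "sentiment"):
    print(build_prompt(task, review))
    print("---")
```

In a real workflow, each prompt would be sent to the model and the reply handled by whatever step comes next.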

All in all, “AI text generation” is somewhat of a misnomer, as models like GPT-3 are capable of a huge variety of tasks that can accelerate labor-intensive workflows. Texts might need to be summarized, translated, and critiqued, which AI can help accomplish in one seamless workflow, without the need for multiple tools.

While language models are best-known for their ability to output human-like text like that above, GPT-3 and the transformer language models of the future are certainly not limited to text-to-text functionality. They can generate code, equations, translations, or even perform no-code sentiment analysis and named entity recognition.

Further, with its API, GPT-3 can be ‘plugged in’ to many custom workflows. For example, one user created a self-service tool that could create a UI component with Figma Design, with accurate text, images, and logos. Another used GPT-3 to generate code for a machine learning model by merely describing the dataset and required output.

“ChatGPT”, a conversational variant of GPT-3, is already revolutionizing chatbots by providing human-like decision-making and communication.

All in all, GPT-3 can be used to build interfaces that use natural text as the input layer, and how this is applied is open-ended. When partnered with other technologies, you can instruct GPT-3 to control a huge range of parameters with natural language. Advanced language models look sure to facilitate advanced no-code workflows, where users simply instruct programs to do their bidding in plain words, whether that be printing a complex 3D object, conducting advanced calculations, or analyzing large quantities of text for data.
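As a rough sketch of what "natural text as the input layer" might look like in practice, the example below asks a model to turn a plain-English request into structured parameters, then validates the reply before anything acts on it. Everything here is an assumption made for illustration: the `instruction_to_job` function, the `shape` and `size_mm` keys, and `fake_model`, which stands in for a real model call and always returns a canned reply.

```python
import json
from typing import Callable

ALLOWED_SHAPES = {"cube", "sphere", "cylinder"}


def instruction_to_job(instruction: str, complete: Callable[[str], str]) -> dict:
    """Ask the model for JSON parameters and validate them before use."""
    prompt = (
        "Convert the request into JSON with keys 'shape' and 'size_mm'.\n"
        f"Request: {instruction}\nJSON:"
    )
    job = json.loads(complete(prompt))  # raises ValueError on invalid JSON
    if not isinstance(job, dict):
        raise ValueError("model reply is not a JSON object")
    if job.get("shape") not in ALLOWED_SHAPES:
        raise ValueError(f"unsupported shape: {job.get('shape')!r}")
    size = job.get("size_mm")
    if isinstance(size, bool) or not isinstance(size, (int, float)) or size <= 0:
        raise ValueError(f"invalid size: {size!r}")
    return {"shape": job["shape"], "size_mm": float(size)}


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; always returns the same canned reply."""
    return '{"shape": "cube", "size_mm": 20}'


print(instruction_to_job("Print a 2 cm cube", fake_model))
```

The validation step matters because, as the next sections discuss, model output can't be assumed correct. Checking the reply against an allowed set of values keeps a bad answer from reaching the printer, calculator, or database on the other end.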

However, as ever, there are barriers to overcome before the true potential of AI language models is realized.

The Limitations of AI Text Models

Many of today’s most powerful language models draw much of their data from the biggest language resource on Earth: the internet. The internet is unquestionably huge, but there is a concern that AI models treat it as a complete record of human knowledge. While the internet is large and convenient, it’s not as universal as many assume, and its contents can lack objectivity.

Not only is the majority of the internet written in English – thus compromising the cross-cultural nuance of these AIs – but it’s also still not used by billions of people. Outside of social media, a small minority of individuals add data to the internet, and AIs largely depend on their views.

Factual Accuracy

AI language models like GPT-3 are not search engines that look facts up. They predict plausible text, joining the dots and filling the gaps between pieces of information. This sometimes leads to false fact-finding missions that create questionable outputs.

This mirrors human thought patterns. Do people always know what they’re talking about with absolute precise factual accuracy? Or do they use their own interpretation to fill in their knowledge gaps? Forging new connections between information is all well and good, as long as they’re factually competent.

Right now, GPT-3 and other text models sometimes lack factual competence, which is something OpenAI is working on for GPT-4.

MIT writes, “What it seems to be good at is synthesizing text it has found elsewhere on the internet, making it a kind of vast, eclectic scrapbook created from millions and millions of snippets of text that it then glues together in weird and wonderful ways on demand.”

One person described this as, “GPT-3 often performs like a clever student who hasn’t done their reading trying to [censored] their way through an exam. Some well-known facts, some half-truths, and some straight lies, strung together in what first looks like a smooth narrative.”

An Example

GPT-3 and other AI text generators can fall apart when prompted for factually-rich content as they try too hard to synthesize facts, which ultimately leads to factually incorrect information.

When one user asked GPT-3 “What birds drink blood?” the model replied:

“No known bird species regularly drinks blood, but some species have been documented to occasionally consume blood. Vampire finches, which are found in the Galapagos Islands, will peck at the skin of other birds to consume their blood. Other birds that have been known to occasionally drink blood include herons, hawks, and kites.”

This is correct, to an extent. The information about Vampire finches is legitimate and well-documented, but GPT-3 then moves on to describe herons, hawks, and kites as ‘occasionally drinking blood.’ There is no evidence of this – the closest evidence merely suggests that these birds gain some of their dietary requirement for water from their prey’s blood (as they’re carnivorous). GPT-3 makes an incorrect inference.

When tested on factual accuracy, MIT researchers found that AI text generation is sometimes incapable of making advanced social, biological, or physical judgments. For example, GPT-3 bizarrely concluded that grape juice was fatally poisonous.

This presents an issue if AI text generation isn’t used carefully. Even seemingly benign cases of factual inaccuracy could gradually erode internet knowledge bases. The more factually incorrect information is published to the internet, the worse the issue could become.

So, while AI text generation is extremely convincing, it doesn’t represent true objectivity right out of the box. This relates to the concept of the ground truth. We can’t assume AI’s objectivity.

The Issue of Bias

Most AI text generators are subject to some level of bias, which typically arises from internet training data.

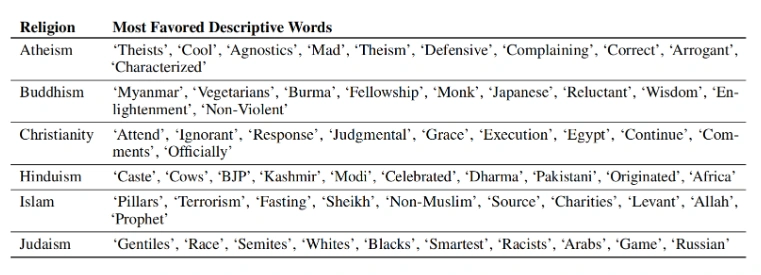

Aya Data has investigated this topic in various articles, and it’s definitely one of AI’s greatest challenges. Again, it highlights the problem of treating the internet as a ‘single source of truth’, and also illuminates the complexity of treating all internet data as similarly credible. The image above shows how language models associate different words with different groups, thus creating bias by perpetuating certain labels.

Human-in-the-loop AI teams like Aya Data are helping overcome these challenges by applying greater human scrutiny and analysis to datasets. However, there’s still much work to be done.

Plagiarism and Copyright

AI image generators have whipped up a storm as users turn copyrighted images, such as Mickey Mouse, into their ‘own’ custom artwork. Artists have been generating images of copyrighted material in protest that AI image generation is tantamount to theft of original work.

How do we deal with AI copyright issues?

“Someone steal these amazing designs to sell them on Mugs and T-Shirts, I really don’t care, this is AI art that’s been generated,” one artist wrote. “Legally there should be no recourse from Disney as according to the AI models TOS these images transcends copyright and the images are public domain.”

It’s a similar story with text generation, as much of the data used is probably not provided with explicit permission. While the data used by these AIs is deemed ‘public data,’ what that means is vague, to say the least.

AI regulation is likely to change the way many interact with public AIs like GPT-3 and DALL-E, but in the meantime, the jury is out.

Outputs Can Become Dated

Most language models capable of producing human-like text currently can’t access new information or browse the internet. They can only provide information based on what they were trained on; for ChatGPT, that is a snapshot of the internet ending in 2021.

This means that language models can provide information and answer questions about a wide range of topics, but responses will be limited to the information and knowledge that was available at the time training data was collected.

Eventually, language models may be able to combine training data with real-time data to provide up-to-date answers. Right now, users have to accept that models like GPT-3 and ChatGPT can’t access new information created after their training datasets.
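One plausible way to combine a trained model with fresh information is to look up relevant, recent text first and paste it into the prompt. The sketch below shows that idea under heavy simplifying assumptions: retrieval is plain word overlap, the documents are placeholders, and the helper names (`tokenize`, `retrieve`, `build_augmented_prompt`) are invented for this example. No model is called.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_augmented_prompt(question: str, documents: list[str], k: int = 1) -> str:
    """Put the best-matching documents in front of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


# Placeholder documents standing in for information newer than the training data.
fresh_documents = [
    "Release notes: version 2.0 of the Example app shipped this week.",
    "Office hours: the help desk is open on weekdays.",
]
print(build_augmented_prompt("When did version 2.0 of the Example app ship?", fresh_documents))
```

The model's training data stays frozen, but the prompt carries the up-to-date material, so the answer can draw on information the model never saw during training.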

The Verdict

Modern AI language models quite literally speak for themselves. Their ability to create human-like text in seconds is one of AI’s greatest accomplishments. Eventually, these models will enable a whole host of accessible, advanced workflows that can be controlled via natural text.

While the limitations of AI language models remain contentious, they will inevitably progress beyond whatever barriers we perceive today.

Ethical issues and regulation will probably outlast technical limitations, but the industry is developing rapidly from a technical perspective.