Content moderation has long been a challenge, but in recent years, it has become increasingly urgent. With growing pressure from regulators and the public, tech giants like Google and Facebook have been compelled to take stronger action against offensive, hateful, illegal, and otherwise harmful material.

While often conflated with censorship, content moderation is broadly supported by both governments and the public, who generally agree that some level of oversight is necessary to maintain safe and respectful online spaces.

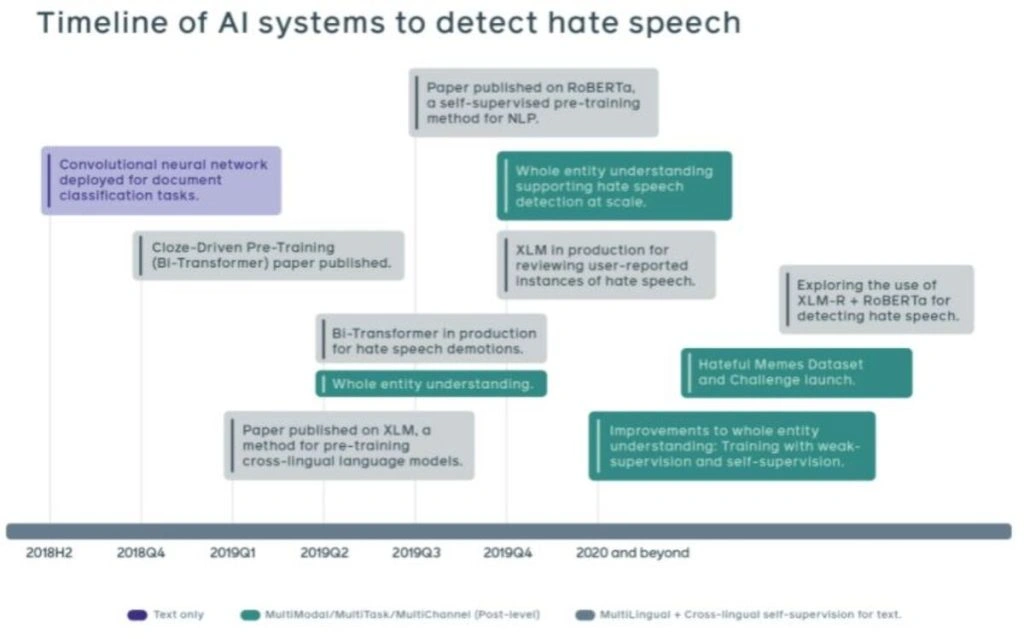

As such, Facebook, Google and other key stakeholders have continued to develop increasingly advanced forms of AI to assist in the battle against harmful content. One of the most recent and capable models is XLM-RoBERTa (XLM-R), which marks a significant improvement on BERT, the previous NLP front-runner.

The Shift From Reactive to Proactive Moderation

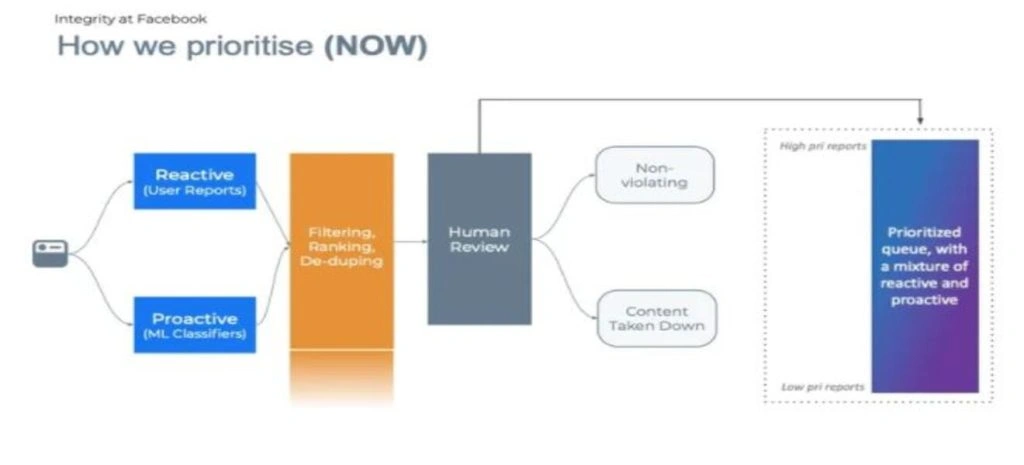

The paradigm shift that NLP facilitates is the move from reactive to proactive moderation. Proactive pre-moderation means moderating content before it’s posted, as opposed to reactive moderation, which relies on community reports.

In pre-moderation, AI triages content to pre-filter it prior to manual analysis by a human. This reduces the burden on humans, whilst ensuring that human teams still have the final say over pre-screened content. Facebook’s new content moderation system aims to remove the most damaging content before the least, placing both reactively and proactively screened content in the same queue.
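
The single-queue idea can be illustrated with a priority queue. The following is a minimal sketch, not Facebook's actual system: the `ReviewQueue` class, the `severity` score and the example content IDs are all hypothetical, and it assumes a model has already assigned each item a severity between 0 and 1.

```python
import heapq
from itertools import count


class ReviewQueue:
    """Single human-review queue ordered by predicted severity (hypothetical)."""

    def __init__(self):
        self._heap = []
        self._tiebreak = count()  # keeps arrival order among equal scores

    def add(self, content_id, severity, source):
        # heapq is a min-heap, so severity is negated to pop the worst item first.
        heapq.heappush(
            self._heap, (-severity, next(self._tiebreak), content_id, source)
        )

    def next_for_review(self):
        neg_severity, _, content_id, source = heapq.heappop(self._heap)
        return content_id, -neg_severity, source

    def __len__(self):
        return len(self._heap)


queue = ReviewQueue()
queue.add("post-101", severity=0.35, source="reactive")   # user report
queue.add("post-102", severity=0.97, source="proactive")  # AI flag
queue.add("post-103", severity=0.60, source="reactive")   # user report

# Human moderators pull items in severity order, regardless of source.
review_order = []
while queue:
    review_order.append(queue.next_for_review()[0])
print(review_order)
```

Ordering by severity rather than by arrival time or by source means a proactively flagged item and a user report compete on the same terms, which is the behaviour the paragraph above describes.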

In 2020, Facebook estimated that AI proactively flags some 88.8% of hateful content, up from 80.2% in the first quarter of that year. The current content moderation system has been criticised for over-deleting, that is, for removing legitimate content alongside harmful material, but its reception has been generally positive.

The content moderation system used by Google is perhaps even more multifaceted, as it has to cover Google Search as well as Maps, YouTube, Ads and many other products. Google partly moderates content during the indexing process but also uses an array of proactive approaches to remove user-generated content (UGC) across its other platforms.

The Issue of Volume

In 2020, Forbes reported that Facebook’s content analysis and moderation AI flagged over 3 million pieces of content every day as potentially violating its community standards. Facebook employs some 15,000 content moderators who have to sift through millions of pieces of content, either confirming or rejecting the AI’s suspicions. Zuckerberg admitted that roughly 1 in 10 pieces of content slip through the net and are allowed to go live, but believes the very worst content is filtered out with a near-100% success rate.
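
To give a sense of scale, the two figures above can be combined into a rough per-moderator workload. This is a back-of-the-envelope sketch only: it assumes every flagged item is reviewed exactly once, that work is spread evenly across all moderators, and that a shift lasts eight hours. None of these assumptions comes from the reporting.

```python
flagged_per_day = 3_000_000  # "over 3 million" flagged items per day (from the text)
moderators = 15_000          # "some 15,000" moderators (from the text)
shift_hours = 8              # assumption: shift length is not stated in the text

# Even split of the daily volume across the whole workforce.
items_per_moderator = flagged_per_day / moderators

# Time available per item if a moderator does nothing else during a shift.
seconds_per_item = shift_hours * 3600 / items_per_moderator

print(f"{items_per_moderator:.0f} items per moderator per day")
print(f"{seconds_per_item:.0f} seconds per item")
```

Under these assumptions, each moderator would handle about 200 items a day, leaving a little over two minutes per decision.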

Whilst modern moderation AIs are exceptionally efficient at working at scale, this doesn’t remove the human burden of content moderation, and the reliance on end-point human involvement is likely to persist. The work stressors that content moderators have to endure whilst working on the ‘terror queue’ (the moderation queue of the very worst content) have also received considerable coverage in recent years.

The Issue of Content Diversity

An Ofcom paper on content moderation highlights the difficulties presented by our ever-expanding content universe. NLP algorithms are now learning to interpret nuances such as sarcasm marked by emoji use without the need for human data labelling, and can accurately derive meaning from short video clips and GIFs, but the development of new offensive slang and hateful imagery presents an ongoing challenge.

Modern content moderation algorithms now have both the depth and breadth to understand advanced multi-modal content types with deep semantic connotations, but false-positive rates remain high, and AI is struggling to keep up with the lengths hate content creators are willing to go to in order to keep their content under the radar.

Moreover, there is considerable diversity in language and imagery itself. The ‘Scunthorpe problem’, in which innocent text is blocked because it happens to contain an offensive string of letters, and numerous related problems highlight the difficulty of applying simple logic to classify offensive words. The risk of false positives remains high and concern has been raised over whether fully automated moderation would lead to cultural or linguistic discrimination.
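
The Scunthorpe problem is easy to reproduce. The sketch below is purely illustrative: the two-word `BLOCKLIST` uses mild placeholder terms, and both filter functions are hypothetical rather than taken from any real moderation product. It compares naive substring matching with whole-word matching.

```python
import re

BLOCKLIST = {"ass", "hell"}  # mild placeholder terms for illustration only


def naive_filter(text):
    """Flag text if any blocked term appears anywhere, even inside another word."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


def whole_word_filter(text):
    """Flag text only if a blocked term appears as a complete word."""
    words = re.findall(r"[a-z]+", text.lower())
    return any(word in BLOCKLIST for word in words)


samples = [
    "Our class passed the assessment",  # innocent, but contains "ass"
    "Meet me at the Shell station",     # innocent, but contains "hell"
    "What the hell is this",            # genuine match
]

for sample in samples:
    print(f"{sample!r}: naive={naive_filter(sample)}, "
          f"whole_word={whole_word_filter(sample)}")
```

Whole-word matching removes the false positives on the first two samples, but it is still simple logic: deliberate misspellings, spacing tricks and new slang slip straight past it, which is part of why context-aware models are used instead.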

There has been major progress in tackling false positives. For example, image and video content moderation APIs like Amazon’s Rekognition use two-layer video labelling and audio labelling hierarchies to accurately discern harmful images from closely related non-harmful images, e.g. breastfeeding women from sexually suggestive content.

The Issue of Freedom of Speech and Ethics

Automated pre-moderation of publicly accessible content imposed by multinationals and governments presents an ethical problem. Platforms like YouTube are tightening their grip on creators who spread misinformation and conspiracy theories, but classifying content as either of these things is likely only possible after a rigorous interrogation of that content rather than a cursory glance.

There has also been considerable debate about where such practices intersect with human rights laws, with critics highlighting how increased false positives, or ambiguous decisions, are robbing people of their public voice and sometimes even their careers. GDPR requires ‘data subjects’ to be informed when their content is removed via automated procedures, and privacy regulations further complicate the boundary between corporate responsibility and censorship.

Summary: Can AI Solve The Problem of Content Moderation?

On a practical level, AI is already helping to filter out content that most people agree should not be publicly visible. However, whether the leap from assistive moderation to fully autonomous pre-moderation AI can be made remains uncertain.

For now, AI serves as a powerful support tool, streamlining workflows for human moderators who still make the final judgment calls. While no system is flawless, keeping humans in the loop remains the most reliable and least risky approach.