Excellent machine learning models aren’t merely born working – they’re trained. And like any training regime, quality matters.

Behind every successful AI system sits a carefully curated dataset that taught it how to recognise patterns, make decisions, and generate predictions. Finding the right training data can make the difference between a model that delivers transformative results and one that merely produces plausible-sounding mistakes.

Whether you’re building computer vision systems, natural language processors, or predictive analytics tools, the foundation remains the same: your model is only as good as the data you feed it.

Training data is needed for all types of supervised machine learning projects:

- Images, video, LiDAR, and other visual media are annotated for computer vision (CV).

- Audio is annotated and labelled for conversational AIs and technologies with audio sensors.

- Text is annotated and labelled for chatbots, sentiment analysis, named entity recognition, and auto translation.

- Numerical data, text, or statistics are used to train various regression or classification algorithms.

This guide explores where to find high-quality training data in 2025, how to evaluate potential datasets, and when to build your own. We’ll cover both traditional sources that have stood the test of time and newer datasets serving present and future generations of cutting-edge AI models.

What Makes a Good Dataset?

A good dataset serves as the cornerstone of any successful machine learning project, balancing quantity with quality, diversity with focus.

The datasets that create breakthrough AI systems don’t just contain mountains of examples – they contain the right examples, properly prepared and carefully balanced to teach models without misleading them.

Four fundamental criteria determine data quality: sufficient size, accurate labelling, representativeness, and regulatory compliance. Each is covered in detail later in this guide.

AI has changed dramatically since we first wrote this article. In 2025, finding good training data is both easier and harder – easier because there’s more of it, harder because picking the right data matters more than ever.

This section highlights the most useful new datasets for machine learning in 2025 and the future.

Text-Based Datasets

- RedPajama-Data-v2 – A massive 30 trillion token dataset from 84 CommonCrawl snapshots across English, German, French, Spanish and Italian. Includes 40+ pre-computed quality annotations for data filtering, making it currently the largest publicly available dataset for LLM training.

- RedPajama-Data-1T – The 1.2 trillion token predecessor to v2, combining data from CommonCrawl, C4, GitHub, ArXiv, Wikipedia and StackExchange. Created as an open-source alternative to replicate LLaMA’s training data.

- The Pile – EleutherAI’s 825GB dataset combining 22 diverse sources including academic papers, code and web text. Though now surpassed in size by newer datasets, it remains influential in open-source AI development.

- Databricks-Dolly-15k – Contains over 15,000 instruction-response pairs written by Databricks employees, specifically designed for instruction-tuning language models to follow complex directions.

- OpenAssistant Conversations – A high-quality conversation dataset featuring multi-turn dialogues with human feedback signals, created collaboratively for building open-source alternatives to ChatGPT.

Multimodal Datasets

- LAION-5B – Contains 5.85 billion CLIP-filtered image-text pairs. Updated to “Re-LAION-5B” in 2024 with improved safety filtering, available in both research and research-safe versions.

- LAION-400M – LAION’s original large-scale dataset with 400 million image-text pairs, still useful for researchers with limited computational resources.

- LAION-Aesthetics – A filtered subset of LAION focused on aesthetically pleasing images, created using a CLIP-based aesthetic predictor model and widely used for training high-quality image generation models.

- WebVid-2M – Approximately 2.5 million video-text pairs collected from stock footage sites, essential for training multimodal models that understand relationships between moving images and text.

- AudioSet – Google’s collection now includes synchronised audio, video, and text annotations, helping models understand how sounds relate to what you see and how people describe them.

- HumanML3D – Pairs written descriptions with 3D human movements, perfect for systems that need to understand or generate physical actions from text instructions.

Other Gen AI/LLM Datasets

- HumanEval – 164 hand-written programming problems designed to evaluate code generation capabilities, originally created to benchmark OpenAI’s Codex model.

- The Stack – A large collection of permissively-licensed source code across multiple programming languages, used to train code models such as StarCoder.

- LeoLM German Dataset – A specialised collection translated from English to German, demonstrating how foundation models can acquire new languages through targeted training.

- LAION-COCO – Around 600 million images from LAION-2B-en paired with synthetic captions generated in the style of Microsoft’s COCO dataset, offering cleaner captions than the original web alt text.

- LAION-Face – A specialised subset focused on facial images, useful for training face recognition, analysis and generation models with careful ethical filtering.

- Multilingual NLP Repository – Unlike older collections that focused on English, this GitHub resource emphasises dozens of languages for truly global AI.

- MMMU – Tests models on university-level problems across 30+ subjects including medicine, engineering, and humanities. Uses text, images, diagrams, and specialised notation together.

- CLIP 2025 – The updated version includes far more diverse visual concepts and languages than the original.

Be sure to visit Hugging Face’s datasets page for many more generative AI, CV, and NLP datasets. LAION has also released some of the largest openly available datasets.

Computer Vision Data

- Roboflow 100 (RF100) – Created with Intel, this project includes hundreds of thousands of images and object types from medical images to aerial photos. It’s particularly good for testing how well your model works across different domains.

- LVIS v1.0 (Large Vocabulary Instance Segmentation) – This Facebook AI Research dataset contains 2.2+ million high-quality instance segmentation masks across 1,000+ object categories in 164,000 images, specifically designed to address long-tail detection challenges.

- nuImages – The latest addition to the nuScenes family, featuring 100,000 2D images with comprehensive annotations including semantic segmentation, depth maps, and 3D bounding boxes for autonomous driving applications.

- Open Images V7 – The update of Google’s dataset now includes 9+ million images with annotations across 600 object classes, adding relationship annotations and densely annotated visual segmentation.

- Re-LAION-5B – Released in 2024, this is the safety-revised version of LAION-5B containing 5.85 billion image-text pairs, available in research and research-safe versions with enhanced content filtering.

- BDD100K (Berkeley DeepDrive) – The 2023 update includes 100,000 driving videos with comprehensive annotations including lane markings, drivable areas, and full-frame instance segmentation, specifically targeting autonomous driving in diverse conditions.

- Places365-Standard – Updated in 2023, this scene-centric database contains 1.8 million images from 365 scene categories, designed for training scene recognition models.

- Objects365 – Features 365 object categories with 638,000 images and 10 million annotated instances, offering greater diversity than previous datasets for detection tasks.

Medical Data

- MIMIC-III – A detailed ICU dataset with everything from vital signs to doctor’s notes, medications and outcomes. Perfect for building clinical prediction systems.

- OASIS – This brain scan collection now includes 1,098 subjects with both MRI and PET scans over time, making it ideal for tracking how conditions progress.

- OpenNeuro – A free platform with 716 public brain datasets from 27,482 people, covering MRI, PET, MEG, EEG, and iEEG data. All in standard formats with proper quality control.

- BioASQ – Annotated biomedical articles specifically designed for medical NLP.

- CORD-19 – Originally built during COVID, this collection of coronavirus research papers keeps growing and now has one of the best structures for medical text mining.

Financial AI Data

- S&P 500 Stock Data (Yahoo Finance) – One of the most reliable datasets for developing financial ML models, containing historical data from the S&P 500 index including companies like Apple, Microsoft, and NVIDIA with decades of daily, weekly, and monthly price information.

- Kaggle Cryptocurrency Dataset – Comprehensive historical price data for cryptocurrencies from Bitcoin to lesser-known altcoins, including daily open/close prices, trading volumes, and market capitalisation metrics.

- IMF Datasets – Hundreds of IMF datasets with filters for countries and purposes.

Satellite Imagery Collections

- PANGAEA Benchmark – A new collection specifically designed for benchmarking Earth Observation Foundation Models, hosted on the Earth Observation Training Data Lab (EOTDL).

- SSL4EO-S12 (and many others) – A large-scale dataset for self-supervised learning in Earth observation that helps models develop robust feature representations.

- Roboflow 100 (RF100) – Contains over 224,000 images spanning 800 object types from medical images to aerial photos, excellent for testing how well computer vision models generalise across domains.

- LoveDA (and others on the link) – 5,987 high-resolution image chips from Google Earth with 7 landcover categories and 166,768 individual labels across 3 Chinese cities, designed for semantic segmentation tasks.

- FloodNet (and many others) – 2,343 UAV images captured after Hurricane Harvey with landcover labels across 10 categories, critical for disaster response and climate change applications.

- Image Analysis and Data Fusion Technical Committee (IADF TC) of the Geoscience and Remote Sensing Society – Numerous diverse geospatial and earth observation datasets for different applications and time periods.

Better Data, Better Ethics

In 2025, we’re finally taking data quality and ethics seriously:

- FairFace – A face image dataset with carefully balanced demographics, designed specifically to reduce racial bias in facial recognition.

- Kaggle’s Bias Detection Challenge – Helps researchers spot and fix biases in AI systems with examples of biased and unbiased outputs.

- EthicalML Framework – Tools for evaluating and improving data quality, representativeness, and ethical soundness.

Making Your Own Data: Synthetic Generation

Perhaps the biggest change in ML since our original article is how good synthetic data has become. Instead of collecting real-world data (with all its privacy headaches), many teams now generate artificial datasets that match the statistical properties of real data without the legal risks.
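As a toy illustration of what “matching the statistical properties” means, the sketch below fits a mean and standard deviation to a small numeric column and samples new values from a normal distribution with those parameters. The sample readings, the function name `synthesise_column`, and the assumption that a single Gaussian describes the data are all illustrative; real synthetic data tools model far richer structure, including relationships between columns.

```python
import random
import statistics


def synthesise_column(real_values, n_samples, seed=0):
    """Sample synthetic values from a normal distribution fitted to real_values.

    Assumption: the column is roughly Gaussian. This is a toy model only.
    """
    mu = statistics.mean(real_values)
    sigma = statistics.stdev(real_values)
    rng = random.Random(seed)  # local RNG so the output is reproducible
    return [rng.gauss(mu, sigma) for _ in range(n_samples)]


# Hypothetical "real" measurements; not taken from any actual dataset.
real = [48.2, 51.7, 49.9, 53.1, 47.5, 50.4, 52.3, 49.0]
synthetic = synthesise_column(real, n_samples=5000)

# Compare summary statistics of the real and synthetic columns.
print(f"real mean={statistics.mean(real):.2f}  synthetic mean={statistics.mean(synthetic):.2f}")
print(f"real stdev={statistics.stdev(real):.2f}  synthetic stdev={statistics.stdev(synthetic):.2f}")
```

None of the synthetic values is copied from the real column, yet the two sets of summary statistics should land close together.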

Leading Synthetic Data Tools:

- NVIDIA Omniverse Replicator – Creates photorealistic synthetic data for AI perception, particularly strong for autonomous vehicles, robots, and medical imaging. Can simulate entire environments with proper physics and lighting.

- Synthesis AI – Specialises in generating synthetic human data – faces, bodies, and behaviours – without the ethics problems of using real people’s images.

- Google’s Synthetic Suite – Generates synthetic tables, text, and medical images while keeping the statistical relationships intact.

See our full exploration of synthetic training data here.

The Horizon: What to Watch for Beyond 2025

The future of training data is already taking shape in research labs worldwide. Four key developments stand out for organisations looking to stay ahead:

- Neuromorphic Training Sets: New brain-inspired computing chips require specially formatted datasets that mirror neural processes. Early adopters are developing training data specifically optimised for these unique architectures, potentially offering massive efficiency improvements for the right problems.

- Quantum-Ready Datasets: As quantum computing matures, we’re seeing the first datasets designed explicitly for quantum machine learning algorithms. These datasets are structured to leverage quantum advantages in specific domains like molecular modelling and complex optimisation problems.

- One-Shot Learning Collections: Moving beyond today’s data-hungry models, specialised datasets are emerging that teach AI systems to learn from just a few examples – much like humans. These carefully curated “seed” datasets could dramatically reduce the amount of data needed for training.

- Cross-Enterprise Data Cooperatives: New secure frameworks allow multiple organisations to collectively build powerful datasets without sharing sensitive information. Using advanced cryptographic techniques, these systems enable collaborative learning while preserving privacy and competitive advantages.

Finding appropriate training data is often a challenge. There are many public and open datasets online, but some are badly dated and many fail to accommodate the latest developments in AI and ML. That said, there are still several sources of high-quality, well-maintained datasets.

What Is a Good Dataset?

A good dataset must meet four basic criteria:

1: Dataset Must be Large Enough to Cover Numerous Iterations of The Problem

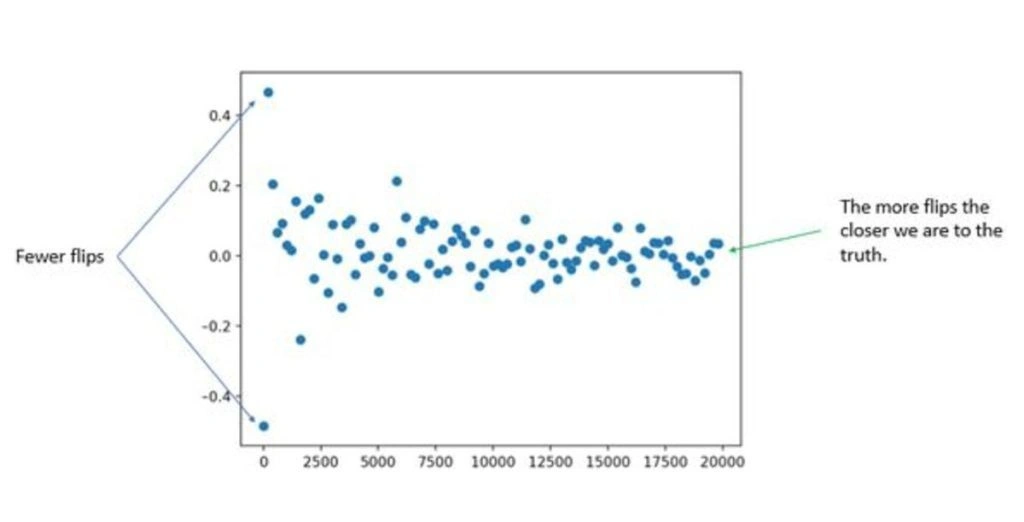

Generally speaking, the larger the sample size, the more accurate the model can be, but size alone is not enough. A model that is too complex for the data it sees can fit noise rather than signal, which is known as overfitting (high variance). Conversely, sparse data or an overly simple model can miss real patterns, which is known as underfitting (high bias).

Rather than assuming that more is always better, datasets should contain enough information to cover the problem space without introducing unnecessary noise to the model. For example, Aya Data built a training dataset of maize diseases to train a disease identification model, accessible through an app. Here, the problem space is limited by the number of diseases present – we created 5,000 labeled images of diseased maize crops to train a detection model with 95% accuracy.
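One common way to check whether a dataset covers the problem space is to hold out a validation set and compare training accuracy with validation accuracy. The sketch below is a minimal version of that idea. The function names, the 80/20 split, the thresholds in `diagnose_fit`, and the accuracy figures passed to it are illustrative assumptions rather than established standards.

```python
import random


def train_val_split(records, val_fraction=0.2, seed=0):
    """Shuffle a copy of records and split off a validation set."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


def diagnose_fit(train_acc, val_acc, min_acc=0.8, max_gap=0.1):
    """Rough heuristic only; both thresholds are assumptions, not standards."""
    if train_acc - val_acc > max_gap:
        return "possible overfitting"   # model does much better on data it has seen
    if train_acc < min_acc:
        return "possible underfitting"  # model struggles even on its training data
    return "reasonable fit"


# 5,000 stand-in record IDs, matching the size of the maize example above.
train, val = train_val_split(range(5000))
print(len(train), len(val))        # 4000 1000

# Hypothetical accuracy figures for three different training runs.
print(diagnose_fit(0.99, 0.72))    # possible overfitting
print(diagnose_fit(0.61, 0.60))    # possible underfitting
print(diagnose_fit(0.95, 0.93))    # reasonable fit
```

A large gap between the two scores suggests the model has memorized its training examples, while low scores on both suggest the data or the model is too limited for the task.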

2: Data Must be Well-Labeled And Annotated

Skilled data labelers with domain knowledge can make the project-critical labeling decisions required to build accurate models. For example, in medical imaging, it’s often necessary to understand the visual characteristics of a disease so it can be appropriately labeled.

The annotations themselves should be applied properly. Even simple bounding boxes are subject to quality issues if the box doesn’t fit tightly around the feature. In the case of LiDAR, pixel segmentation, polygon annotation, or other complex labeling tasks, specialized labeling skills are essential.
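Bounding box tightness can be measured with intersection over union (IoU), which compares an annotator's box against a trusted reference box. The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples in pixels; the example coordinates are made up for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union for boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle (if any).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp to zero so non-overlapping boxes give an empty intersection.
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


reference = (10, 10, 110, 110)  # hypothetical tight box around the feature
loose = (0, 0, 120, 120)        # hypothetical box with 10 px of slack on each side

print(round(iou(reference, loose), 3))  # 0.694
```

Even 10 pixels of slack on each side drops the score to roughly 0.69, which is why a review step might flag annotations that fall below a project-specific IoU threshold.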

3: Data Must be Representative And Unbiased

Non-representative or biased data has already impacted public trust in AI. When it comes to autonomous vehicles (AVs), biased training data might be a matter of life and death. In other situations, e.g., recruitment, utilizing biased AIs can result in regulatory issues or even breaking the law.

Numerous models have already failed due to bias and misrepresentation, such as Amazon’s recruitment AI that was biased against women and several AV models that failed to detect people with darker skin tones. The lack of diversity in datasets is a pressing issue with both technical and ethical ramifications that remain largely unaddressed today.

AI teams need to actively engage with the issue of bias and representation when building training data. Unfortunately, this is a distinctly human problem that AIs cannot yet fully solve themselves.
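A simple first step is to count how often each group or class appears in the dataset and flag those that fall below a chosen share. The sketch below does this with the standard library. The group names, the counts, and the 10% cut-off are hypothetical, and a balanced count alone does not show that a dataset is unbiased.

```python
from collections import Counter


def underrepresented_groups(labels, min_share=0.1):
    """Return each group whose share of the dataset falls below min_share."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        group: count / total
        for group, count in counts.items()
        if count / total < min_share
    }


# Hypothetical group labels attached to 1,000 samples.
groups = ["A"] * 700 + ["B"] * 250 + ["C"] * 50

print(underrepresented_groups(groups))  # {'C': 0.05}
```

Here group C makes up only 5% of the samples, so it is a candidate for targeted collection or re-sampling before training.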

4: Data Must Comply With Privacy Regulations

Privacy and data protection laws and regulations, such as GDPR, constrain the use of data that involves people’s identities or personal property. In regulated industries such as finance and healthcare, both internal and external policies introduce red tape to the use of sensitive data.

Public and Open Source Data For ML Projects

Public and open source data can be used, reused, and redistributed without restriction. Strictly speaking, open data is not restricted by copyright, patents, or other forms of legal or regulatory control. It is still the user’s responsibility to conduct appropriate due diligence to ensure the legal and regulatory compliance of the project itself.

There are many types of open datasets out there, but many aren’t suitable for training modern or commercial-grade ML models. Instead, many are intended for educational or experimental purposes, though some can make good test sets. Nevertheless, the internet is home to a huge range of open datasets for AI and ML projects and new models are being trained on old datasets all the time.

Government Datasets

Government-maintained datasets are offered by many countries, including the US (data.gov), the UK (data.gov.uk), Australia (data.gov.au), and Singapore (data.gov.sg). Most data is available in XML, CSV, HTML or JSON format. Public sector data relates to everything from transport and health to public expenditure and law, crime, and policing.
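Both CSV and JSON downloads can be read with Python's standard library. The sketch below uses small inline samples so that it runs without a network connection; the station names, column names, and figures are invented and do not come from any real government portal.

```python
import csv
import io
import json

# Invented samples standing in for files downloaded from an open data portal.
csv_text = "station,year,passengers\nCentral,2023,120000\nHarbour,2023,45000\n"
json_text = '[{"station": "Central", "year": 2023, "passengers": 120000}]'


def load_csv_rows(text):
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def load_json_rows(text):
    """Parse a JSON array of records into a list of dicts."""
    return json.loads(text)


csv_rows = load_csv_rows(csv_text)
json_rows = load_json_rows(json_text)

# CSV values arrive as strings, while JSON preserves numeric types.
print(csv_rows[0]["passengers"], type(csv_rows[0]["passengers"]).__name__)    # 120000 str
print(json_rows[0]["passengers"], type(json_rows[0]["passengers"]).__name__)  # 120000 int
```

The type difference matters when the same dataset is offered in several formats, because CSV columns usually need explicit conversion before they can be used as numeric features.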

18 Public and Open Source Datasets

Here is a list of eighteen well-known datasets. Some include already-labeled data. This is by no means an exhaustive list, and it’s also worth noting that some of these datasets are becoming quite aged (e.g. StanfordCars) and may only be useful for experimental or educational purposes. You can find some other datasets for NLP projects here.

- Awesome Public Datasets – A huge list of well-maintained datasets related to agriculture and science, demographics and government, transportation, and sports.

- AWS Registry of Open Data – Amazon Web Services’ source for open data, ideal for ML projects built on AWS.

- Google Dataset Search – Google Finance, Google Public Data, and Google Scholar are also mineable for training data.

- ImageNet – A vast collection of labeled images for object recognition tasks, some with bounding boxes, organized according to the WordNet hierarchy.

- Microsoft Research Open Data – A range of datasets for healthcare, demography, sciences, crime, and legal and education.

- Kaggle Datasets – Probably the go-to for public datasets, of which there are over 20,000 on the site.

- Places and Places2 – Scenes for object recognition projects. Features some 1.8 million images grouped in 365 scene categories.

- VisualGenome – A dataset that connects structured image concepts (objects, attributes, and relationships) to language.

- StanfordCars – Contains 16,185 images of 196 classes of cars.

- FloodNet – A natural disaster dataset created using UAVs.

- The CIFAR-10 dataset – Contains some 60,000 32×32 images in 10 classes.

- Kinetics – 650,000 video clips of human actions.

- Labeled Faces in the Wild – 13,000 faces for face recognition tasks.

- CityScapes Dataset – Street scenes for AV and CCTV models.

- EarthData – NASA’s dataset hub.

- COCO Dataset – Common Objects in Context, a dataset for object detection, segmentation, and captioning.

- Mapillary Vistas Dataset – Global street-level imagery dataset for urban semantic segmentation.

- NYU Depth V2 – For indoor semantic segmentation.

Creating Custom Data For ML Projects

It’s also possible to create custom datasets using any combination of data retrieved from datasets, data mining, and self-captured data.

- Image and video footage can be annotated for CV projects. You can find some examples in our case studies – such as annotating ultra-HD satellite images to train a model that could identify changing land uses.

- Text can be taken from customer support logs and queries to train bespoke chatbots. This is how many businesses and organizations train chatbots, rather than using open datasets.

- Audio can be sampled from customer recordings, or other audio sources. This data can then be used to train speech or audio recognition algorithms, or transcribed into other languages.

The advantage of using public and open data is that it’s (generally) free from regulation and control. Data mining, by contrast, introduces several challenges for data use and privacy, even when working with open-source data. For example, mining public data from the internet and using it to construct a dataset might be prohibited under GDPR even when efforts are made to anonymize the data. Many sites also have policies restricting data mining, and may disallow crawlers in their robots.txt file.
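A site's robots.txt rules can be checked programmatically before any page is collected. The sketch below uses Python's built-in `urllib.robotparser` against an inline example file so that it runs offline. The rules, the `example.com` URLs, and the `my-dataset-bot` user agent are hypothetical, and passing this check says nothing about a site's terms of service or about GDPR.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. For a live site, call
# parser.set_url("https://example.com/robots.txt") and parser.read() instead.
robots_txt = """\
User-agent: *
Disallow: /private/
Allow: /
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Check individual URLs before adding them to a crawl queue.
print(parser.can_fetch("my-dataset-bot", "https://example.com/articles/1"))    # True
print(parser.can_fetch("my-dataset-bot", "https://example.com/private/data"))  # False
```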

In contrast, if a company, business, or organization possesses its own data (e.g. from live chat logs or customer databases), then this can be used to construct custom datasets.

Working With a Data Sourcing and Labeling Partner

If you’re looking to create custom, bespoke data, data labeling services can label data from existing datasets, create entirely new custom datasets, or employ data mining techniques to discover new data.

Leveraging the skills and experience of data sourcing and annotation specialists is ideal when you need a data set to cover a broad spectrum of scenarios (e.g. edge cases), or when control over intellectual property is needed. Moreover, labeling partners can accommodate advanced labeling projects that require domain knowledge or particular industry-specific skills.

Training models is usually an iterative task that involves stages of training, testing, and optimizing. Whether it be adding new samples or changing labeled classes and attributes, being able to change training data on the fly is an asset.

Labeling partners help AI and ML projects overcome the typical challenges involved with using inadequate pre-existing training data.

The Human In The Loop (HITL)

Whatever the demands of your project, you will likely need a human-in-the-loop workforce to help train and optimize your model.

From bounding boxes, polygon annotation, and image segmentation to named entity recognition, sentiment analysis, and audio and text transcription, Aya Data’s HITL workforce has the skills and experience required to tackle new and emerging problems in AI and machine learning.

Finding the Right Training Data For Your Machine Learning Project

The hunt for proper training data remains critical for machine learning success. While the datasets available in 2025 are vastly improved – with options like synthetic generation now mainstream – the core principles haven’t changed.

Good training data still needs to be:

- Comprehensive yet focused on your specific problem

- Properly annotated by people who understand the domain

- Diverse and representative to avoid harmful biases

- Compliant with relevant regulations

The best solution often combines public datasets, custom collection, and human expertise. Public datasets give you a head start, but rarely fit perfectly. Custom data collection targets your exact needs but takes time and resources.

Throughout the entire process, human expertise remains essential, particularly in human-in-the-loop (HITL) workflows.

These structured feedback processes help AI systems learn from their mistakes and continuously improve. Even with the most advanced synthetic data, the judgment and context that humans provide remain irreplaceable.
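One simple form of such a feedback process sends low-confidence predictions to a human reviewer and accepts the rest automatically. The sketch below shows that routing step. The 0.8 threshold, the record fields, and the example predictions are assumptions made for illustration and do not describe any particular production workflow.

```python
def route_predictions(predictions, threshold=0.8):
    """Split predictions into auto-accepted and human-review queues by confidence."""
    auto_accepted, needs_review = [], []
    for item in predictions:
        if item["confidence"] >= threshold:
            auto_accepted.append(item)
        else:
            needs_review.append(item)  # a human checks and corrects these
    return auto_accepted, needs_review


# Hypothetical model outputs for three images.
preds = [
    {"id": 1, "label": "healthy", "confidence": 0.97},
    {"id": 2, "label": "rust", "confidence": 0.55},
    {"id": 3, "label": "blight", "confidence": 0.81},
]

auto, review = route_predictions(preds)
print([p["id"] for p in auto])    # [1, 3]
print([p["id"] for p in review])  # [2]
```

Labels corrected by reviewers can then be added back to the training set for the next training round.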

Partner with Aya Data to transform your raw data (text, images, video, audio) into AI-ready datasets. Our expert team delivers high-quality annotations to build smarter AI.