Audio and text transcription has long been a cornerstone of machine learning. There are two core functions of audio and speech transcription, which partly sit within the natural language processing (NLP) discipline of artificial intelligence.

The first function is turning speech into written language (speech to text, or STT), which is useful in assistive reading and writing and is widely used in sectors such as journalism, marketing, healthcare, law and corporate communications. Combined with machine translation, STT can also be used to convert speech in one language into text in another.

The second function is converting text to speech (TTS), the opposite of STT, which is useful for creating human-like voices from text data.

This article explores the current and future uses of speech recognition and the hurdles we must overcome to train inclusive models.

What is Speech Recognition?

Speech recognition is best defined as the technology that enables machines to identify, interpret, and convert spoken language into written text. Speech recognition systems use natural language processing (NLP) and machine learning algorithms to analyze audio inputs, recognize speech patterns, and transcribe spoken words accurately. Speech recognition is commonly used in applications like virtual assistants (e.g., Siri, Alexa), voice typing, call center automation, and accessibility tools.

What is Voice Recognition in AI Used For?

Voice recognition in AI is used to identify and process human speech, enabling machines to understand, interpret, and respond to spoken commands. This technology powers a wide range of applications, including virtual assistants (like Google Assistant, Siri or Alexa), voice-activated search, hands-free device control, transcription services, customer service automation, and accessibility tools for individuals with disabilities. By converting speech into text or actionable commands, voice recognition enhances user experience and enables more natural human-computer interactions.

There have been a few issues, though, such as transcribing speech recorded in noisy environments. Automatically translating one language into another from an audio transcript has also only recently been accomplished with high accuracy.

Voice recognition has many large and small-scale uses, from automatically transcribing doctor-patient conversations and courtroom proceedings to providing on-demand translation between different languages. One of the most common commercial uses is in smart home devices and voice assistants like Alexa.

Voice recognition is crucial for smart home technology

Some uses of voice recognition AI and ML include:

- Auto transcription for medical, courtroom, journalistic and other purposes.

- Digital assistants and conversational AIs such as Siri and Alexa.

- Smart home technology.

- Voice recognition in voice-controlled technologies.

- Converting voice to text (STT) for hands-free and assisted typing and device operation.

- Surveillance for anti-crime and military purposes.

- Voice recognition for commercial intelligence, e.g., eavesdropping for the sake of advertising, marketing, and personalized recommendations.

Following on from the last example, many people have alleged that phones and other mobile devices listen to conversations for the sake of targeted advertising and other forms of intelligence.

These allegations unsurprisingly caused a media stir, with many claiming that Big Tech was invading people’s privacy and possibly contravening privacy regulations like GDPR.

Big Tech and various news stories countered these allegations by saying advertisers don’t need to eavesdrop to effectively target ads – they collect enough authorized data to do that already.

How Does Speech-Targeted Advertising Work?

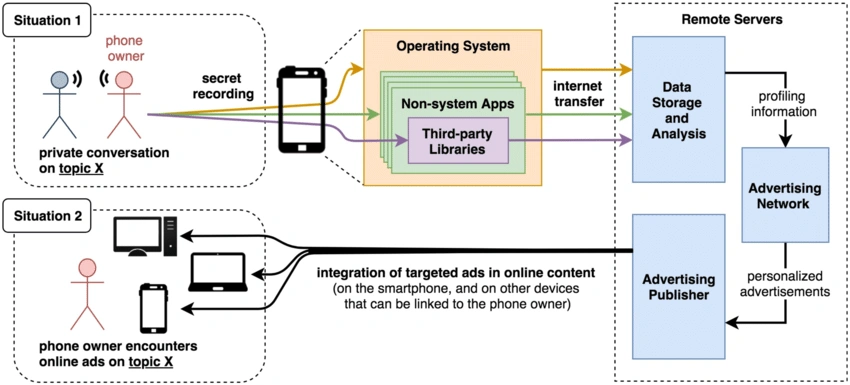

In the alleged scenario, certain apps and services activate the phone’s microphone and listen for particular keywords, which are forwarded to remote servers for server-side processing.

The keywords are then passed to advertising networks and other services, which convert them into personalized and targeted ads.

These ads are then integrated into online content. Another common observation is that keywords we mention in conversation seem to sharpen the predictions offered by Google or YouTube search. The diagram above illustrates how this could be possible.
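The keyword step in that pipeline can be sketched in a few lines. This is a purely illustrative toy, not any real app's or ad network's implementation: the `WATCHLIST` mapping, the `spot_keywords` function and the sample sentence are all hypothetical, and it assumes the audio has already been transcribed to text.

```python
import re

# Hypothetical watchlist mapping spoken keywords to ad categories.
WATCHLIST = {
    "holiday": "travel",
    "flights": "travel",
    "mortgage": "finance",
    "sneakers": "fashion",
}


def spot_keywords(transcript: str, watchlist: dict[str, str]) -> dict[str, int]:
    """Count how often each watched ad category is triggered by a transcript."""
    # Lowercase the text and split it into simple word tokens.
    words = re.findall(r"[a-z']+", transcript.lower())
    hits: dict[str, int] = {}
    for word in words:
        category = watchlist.get(word)
        if category is not None:
            hits[category] = hits.get(category, 0) + 1
    return hits


snippet = "We should book flights soon, the holiday is only a month away."
print(spot_keywords(snippet, WATCHLIST))
```

In this sketch only the category counts would need to leave the device, which is one reason such a scheme would be hard to detect from network traffic alone.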

Whether phones really listen in this way is difficult to establish, but a couple of studies and some authoritative sources state it’s highly likely that marketing data is at least ‘enriched’ by soundbites captured by the device microphone.

As we can see below, dissonance in the media about this topic is common. Anyone who’s discussed a specific topic only to find immediate suggestions or ads based on their conversation has probably made their mind up already.

Media dissonance surrounding voice recognition

One thing is for sure: voice recognition is getting better.

But, like most AIs, it has both positive and negative uses that researchers must navigate if they’re to emphasize the benefits and mitigate the risks.

Voice Recognition Today: OpenAI’s Whisper

In September 2022, OpenAI, the developer behind innovative public AIs such as DALL-E and GPT-3, released Whisper.

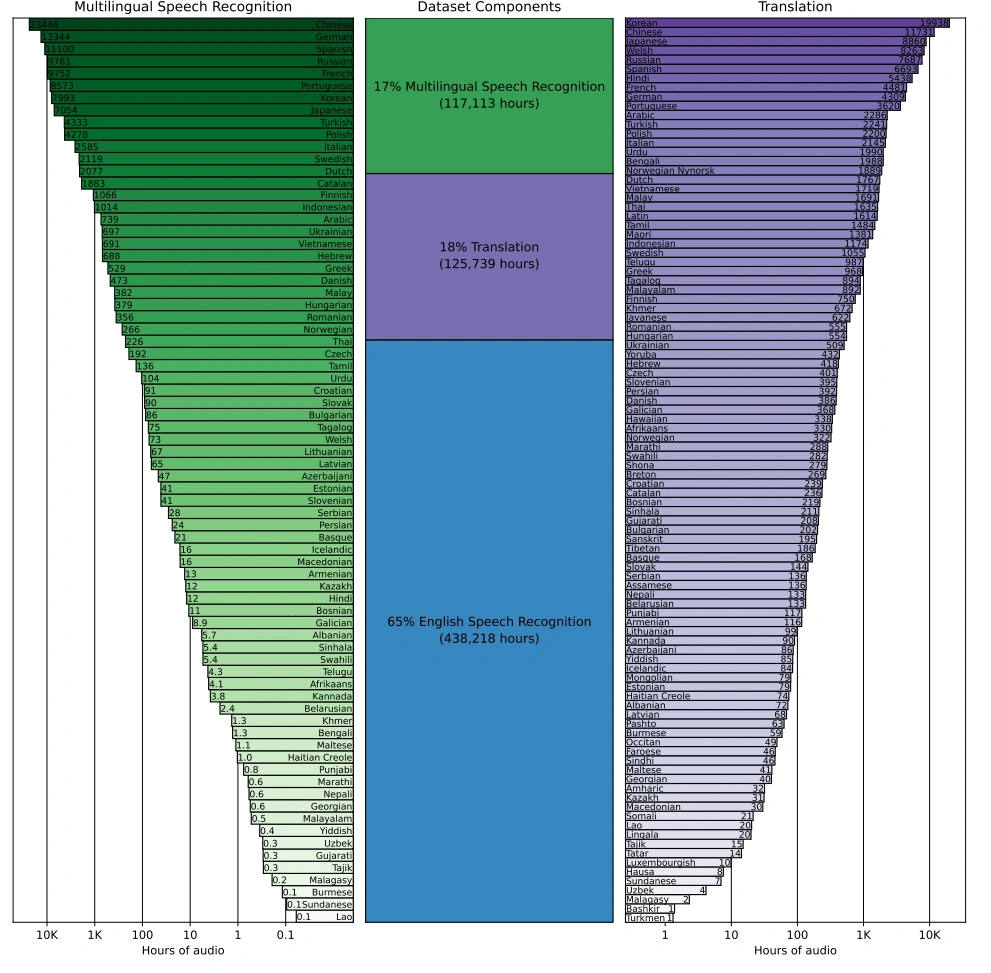

In OpenAI’s words: “Whisper is an automatic speech recognition (ASR) system trained on 680,000 hours of multilingual and multitask supervised data collected from the web.” That equates to some 77 years of speech.
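As a quick check of that conversion, assuming continuous playback and ignoring leap years:

```python
HOURS_OF_AUDIO = 680_000   # training-data figure quoted by OpenAI
HOURS_PER_YEAR = 24 * 365  # assumption: non-leap years, audio played back to back

years_of_speech = HOURS_OF_AUDIO / HOURS_PER_YEAR
print(f"{years_of_speech:.1f} years")
```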

Like other transcribers, the model takes speech and either transcribes it or translates it into English. In essence, Whisper is not technologically groundbreaking – Apple and Google have embedded sophisticated ASR into services and devices for years.

But, unlike competitors, not only is Whisper open source but it’s also trained on “multitask” audio that contains background noise ranging from environmental noise to music. You can speak into it quickly, with accents, background noise, etc., and it still produces reliable results. OpenAI provides an example of Whisper transcribing Korean from a K-pop song to illustrate this.

Moreover, Whisper enables users to transcribe audio files without uploading them to any internet services – useful for transcribing potentially private or sensitive audio.
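A minimal sketch of local transcription, assuming the open-source `openai-whisper` Python package and ffmpeg are installed. The wrapper name `transcribe_locally`, the `VALID_TASKS` set and the file name in the usage note are hypothetical; `whisper.load_model` and `model.transcribe` are the package's own calls.

```python
VALID_TASKS = {"transcribe", "translate"}


def transcribe_locally(audio_path: str, model_name: str = "base",
                       task: str = "transcribe") -> str:
    """Run Whisper on a local audio file and return the recognized text.

    task="transcribe" keeps the spoken language;
    task="translate" produces English text.
    """
    if task not in VALID_TASKS:
        raise ValueError(f"task must be one of {sorted(VALID_TASKS)}")

    # Imported lazily so this module can be loaded without the package installed.
    import whisper

    # Model weights are downloaded on first use and cached afterwards.
    model = whisper.load_model(model_name)
    result = model.transcribe(audio_path, task=task)
    return result["text"].strip()
```

Calling `transcribe_locally("interview.mp3")` would then run entirely on the local machine. The only network access is the one-off download of the model weights; the audio itself is never uploaded.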

The Challenge of Bias In Voice Recognition

There is substantial evidence that AI bias affects voice recognition performance and outcomes.

Recognizing naturally spoken language is exceptionally difficult when you factor in dialects, accents, slang, and local, regional, and national conventions.

For example, take Canada, which has a relatively small population. There are around eight active English dialects, two French dialects, and the Michif language of the Métis people. Then, you can find significant differences in language based on locality, gender, age, and other demographic and socioeconomic factors.

Another example is the tremendous variation between southern and central English accents and northern English, Welsh, Irish and Scottish accents in the United Kingdom and Ireland. A well-known comedy sketch imagines what happens when a Scottish man enters a voice-controlled elevator.

It’s not just accents and dialects – new language develops all the time, and there’s no reliable way to update AIs with new vocabulary. Some 1,000 new words are officially added to the English lexicon every year, and new slang is created constantly.

A familiar issue arises here: complex AIs need more hand-picked, hand-annotated data to fill training gaps, but at the scale that neural networks require.

Semi-automated or programmatic labeling is one solution; alternatively, teams could create small, ultra-detailed sets of hand-annotated data. Currently, there is no single solution for building inclusive voice recognition.

Poor voice recognition performance for certain groups is not just a theoretical matter either; it has real impacts that can exacerbate existing bias or create bias and prejudice where there was none before.

The Impacts of Biased Speech Recognition

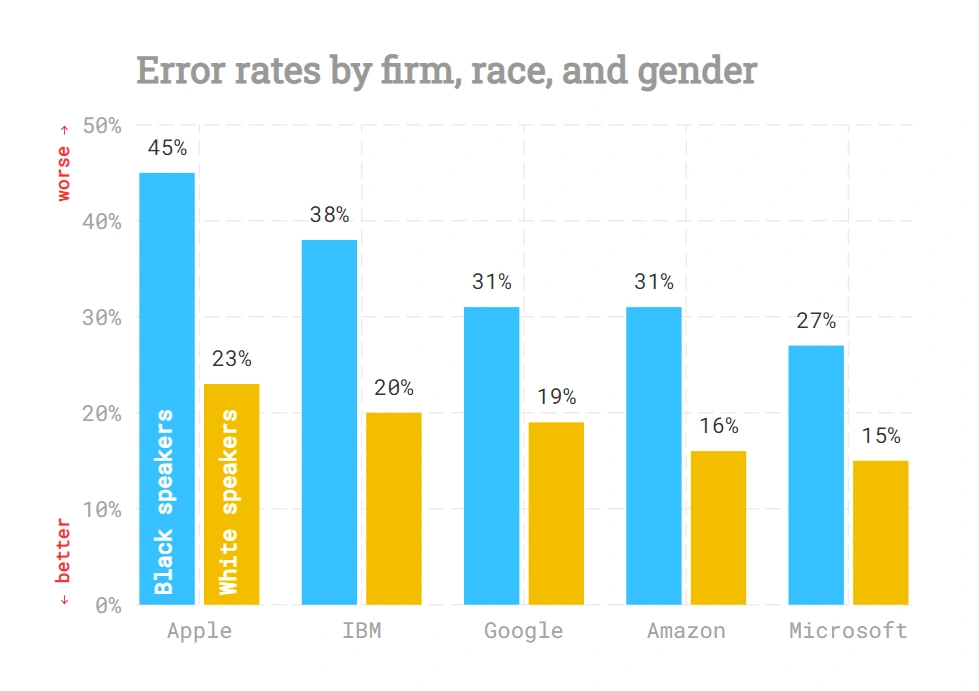

A milestone Stanford study, “Racial disparities in automated speech recognition”, analyzed thousands of audio snippets from white and black speakers using speech-to-text services from Amazon, Apple, Google, IBM, and Microsoft. The researchers found significantly higher error rates for black speakers across all five companies’ systems.

Speech recognition error rates – Stanford

This severely limits the value some groups get from voice recognition, a growing issue given how hard such devices are becoming to avoid.
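Error rates of this kind are usually reported as word error rate (WER): the number of word substitutions, deletions and insertions needed to turn the machine transcript into the human reference, divided by the length of the reference. The sketch below shows one way to compare average WER across speaker groups. The function names, group labels and sample sentences are made up for illustration and are not taken from the Stanford study.

```python
from collections import defaultdict


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between the transcripts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # previous[j] = edit distance between the reference words seen so far and hyp[:j]
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


def mean_wer_by_group(samples):
    """samples: iterable of (group, reference, hypothesis). Returns mean WER per group."""
    scores = defaultdict(list)
    for group, reference, hypothesis in samples:
        scores[group].append(word_error_rate(reference, hypothesis))
    return {group: sum(values) / len(values) for group, values in scores.items()}


# Made-up examples: human reference transcript vs. machine output.
samples = [
    ("group_a", "turn on the kitchen lights", "turn on the kitchen lights"),
    ("group_b", "turn on the kitchen lights", "turn on the chicken light"),
]
print(mean_wer_by_group(samples))
```

A persistent gap between groups in a report like this, measured on comparable audio, is the kind of signal that points to under-representation in the training data.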

Halcyon Lawrence, an assistant professor of technical communication and information design at Towson University, has a Trinidadian and Tobagonian accent and says, “I don’t get to negotiate with these devices unless I adapt my language patterns... that is problematic.” She argues that she has to adapt her accent – and therefore her identity – to conform to something the AI can understand.

Bias Hits Vulnerable Communities the Hardest

Some point out that the impact falls hardest on particularly vulnerable communities, such as disabled people whose voices and vernacular are poorly understood and who have to rely on inadequate voice recognition in daily life.

Smart home technology has the capacity to assist people with their living, but if it repeatedly fails for already-marginalized groups, then its potential is severely limited. For example, one study found that speech recognition performs poorly for aging voices.

Further, research shows that accent affects whether jurors find people guilty and even influences whether or not patients find their doctors competent. Baking these types of biases and judgments into AI could have disastrous results.

These issues are analogous to other disciplines of AI and ML, such as the bias exhibited by various AIs that learn from internet data, biased policing algorithms, and recruitment AIs that are biased against women and some ethnic backgrounds.

The impacts of bad AI are a pressing issue that the industry must seek to prevent and mitigate.

What Can We Do?

Maximizing fair and equitable representation in datasets is a top priority. A large proportion of the issue is caused by poor representation in the data itself. By building more inclusive and diverse datasets that represent all groups, AI and ML models can be trained to benefit everyone.
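One simple starting point is to measure how a dataset's audio hours are distributed across speaker groups before training. The sketch below is an illustration only: the `representation_report` function, the group labels, the durations and the 10% threshold are all assumptions, and real demographic metadata has to be collected with consent and handled carefully.

```python
from collections import Counter


def representation_report(clips, min_share=0.10):
    """clips: iterable of (group_label, duration_hours).

    Returns each group's share of total audio hours and flags groups
    whose share falls below min_share (an arbitrary, hypothetical threshold).
    """
    hours = Counter()
    for group, duration in clips:
        hours[group] += duration

    total = sum(hours.values())
    if total <= 0:
        raise ValueError("dataset contains no audio")

    return {
        group: {
            "share": group_hours / total,
            "under_represented": group_hours / total < min_share,
        }
        for group, group_hours in hours.items()
    }


# Made-up dataset summary: hours of labeled speech per accent group.
clips = [("accent_a", 80.0), ("accent_b", 15.0), ("accent_c", 5.0)]
for group, stats in representation_report(clips).items():
    print(group, f"{stats['share']:.0%}", stats["under_represented"])
```

Groups flagged by a report like this are candidates for targeted data collection and annotation.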

AI and ML are still in their infancy, and the datasets required to train complex models are often inadequate or don’t exist at all. The lack of quality datasets has seen companies like OpenAI turn to public internet data, but that comes with its own risks.

Building diversity and inclusion into AIs has become an international research movement, and the consensus is that datasets need to serve the entire population, which is where data labeling and annotation providers like Aya Data help.

Our human-in-the-loop labeling teams understand the importance of creating diverse and inclusive datasets that draw upon both domain experts and the skills and intuition of our team. Contact us to get a free quote on our speech recognition service (Aya Speech).