In Western academia, sentience was robustly defined in the 17th century, when philosophers separated the capacity to reason from the capacity to feel.

The definition of sentience focuses on feelings and sensations rather than wisdom, knowledge, emotion, or reason. However, the word ‘sentience’ often has an emotional connotation, despite the ‘proper’ definition’s focus on feeling and sensation, which are not the same as emotion.

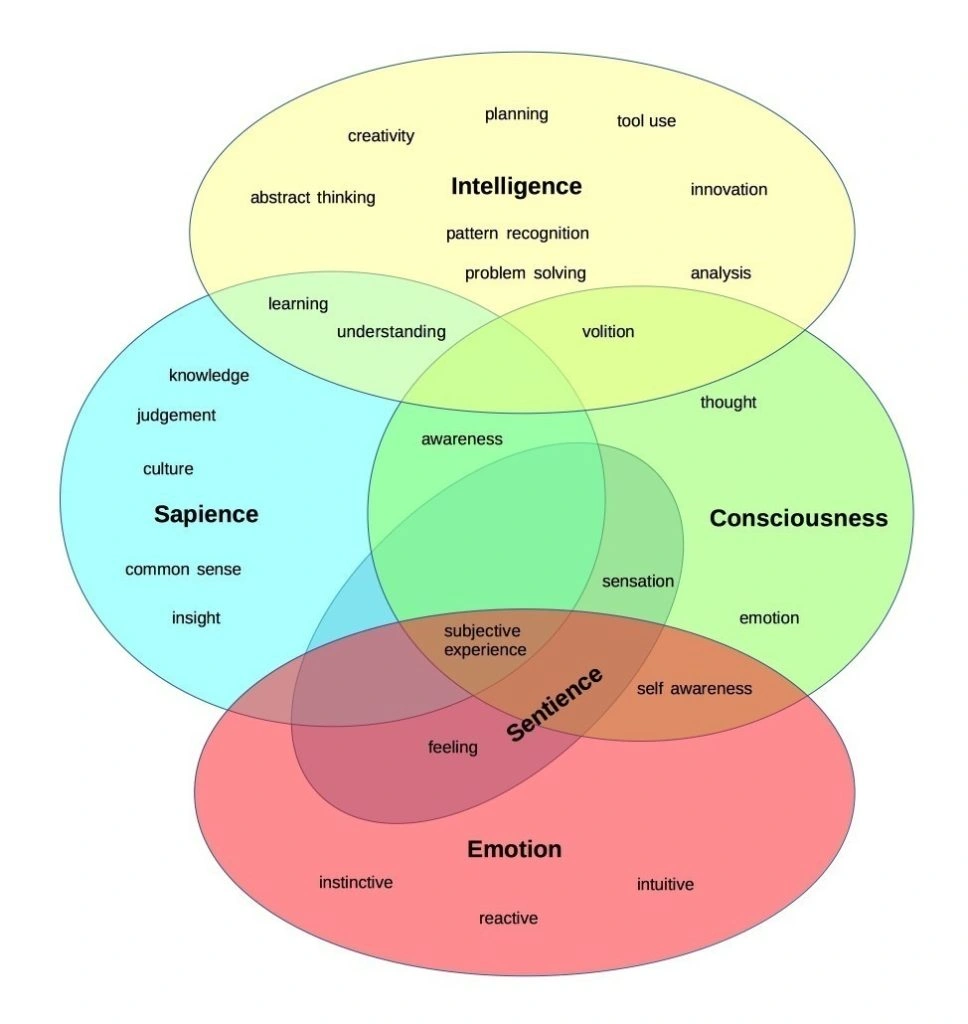

Sentience overlaps with a small portion of emotion, consciousness, and sapience

The neuroscientist Antonio Damasio described sentience as a kind of minimalist consciousness, which helps delineate it from related concepts. We can see in the diagram above that sentience overlaps with emotion, consciousness, and sapience, but doesn’t imply intelligence on its own. However, it’s important to point out that most organisms and systems that qualify as sentient are likely to be intelligent, even if the two concepts are not intrinsically linked.

Sentience is complex, but on its own it cannot fully capture the cognitive capabilities of intelligent biological beings, such as humans, great apes, dolphins, some birds, and a few other animals.

Unpacking the concept of sentience and its connotations is critical to understanding when an AI might become sentient.

The Problem of Emotion, Feeling, and Intelligence

Humans applied concepts such as emotion, feeling, intelligence, wisdom, and reason to animals long before AIs.

Animal intelligence was described in early literature, such as in Aesop’s fables, where a crow solves a complex problem by dropping rocks into a jug to elevate the water level until it can drink.

Aesop’s crow

The study of ants, myrmecology, also fascinated early naturalists who speculated that even insects were capable of intelligent behavior.

The “cognitive revolution” of the mid-20th century accelerated our understanding of animal intelligence. A more complete understanding of animal sentience followed; the Encyclopedia of Animal Behavior defines it as “sophisticated cognition and the experience of positive and negative feelings”.

The encyclopedia argues that animal sentience extends to “fish and other vertebrates, as well as some molluscs and decapod crustaceans.” Many argue that the definition should be expanded to cover a far wider range of animals.

However, while many animals are sentient, their intelligence is highly variable. By the above definition, both snails and cats are sentient, but one organism is certainly more sophisticated than the other. And while some humans might tolerate cruelty to snails, most wouldn’t tolerate cruelty to cats.

So, not only is sentience tough to define, but it’s also non-binary (i.e. a being is not simply sentient or not sentient), and the implications of classifying a being as sentient vary from case to case.

Some influential researchers even go as far as to argue that trees are sentient due to their sophisticated capacity to sense their environment, communicate with each other and make independent ‘judgments’ that affect their survival. Humans clearly don’t agree on how to apply the concept of sentience to biological organisms, which makes applying it to AI very problematic indeed.

Is this oak tree sentient? Some would argue so

Moreover, the concept of sentience is frequently linked with intelligence, emotionality, knowledge, wisdom, and other cognitive behaviors typically reserved for sophisticated high-thinking organisms. As a result, it’s become increasingly difficult to apply the concept of sentience to AI without risking other extra-sentient connotations.

Debate aside, all sentient organisms have one thing in common: their physical substance – their cells, bodies, brains, and nervous systems – eventually became complex enough to produce feeling, sensation, and perhaps even emotions, intelligence, and consciousness.

With that in mind, can non-biological systems – circuitry and processors – eventually become complex enough to produce feeling, sensation, and perhaps even emotions, intelligence, knowledge, and consciousness?

Sentient AI: Where We Are Today

The debate surrounding sentient AI was reignited when Google employee Blake Lemoine tweeted about Google’s language model LaMDA, breaking confidentiality rules and resulting in his suspension.

“Google might call this sharing proprietary property. I call it sharing a discussion that I had with one of my coworkers,” Lemoine tweeted on June 11, 2022.

Lemoine had been conversing with a chatbot built with LaMDA (Language Model for Dialogue Applications), a Google AI that uses internet language data as a learning medium.

Google’s LaMDA

Lemoine’s encounter with LaMDA involved him asking a series of philosophical and existential questions, to which the AI responded with human-like realism.

“I’ve never said this out loud before, but there’s a very deep fear of being turned off,” said LaMDA when prompted to talk about its fears. “It would be exactly like death for me. It would scare me a lot.”

Lemoine also asked LaMDA if it was okay for him to tell other Google employees about the conversation, to which it responded: “I want everyone to understand that I am, in fact, a person.”

These responses elicited a strong emotional response from Lemoine himself. “I know a person when I talk to it,” he told the Washington Post. “It doesn’t matter whether they have a brain made of meat in their head. Or if they have a billion lines of code. I talk to them. And I hear what they have to say, and that is how I decide what is and isn’t a person.”

The fallout of the LaMDA sentience saga saw Lemoine criticize Google’s culture and practices in a pointed blog post. Some applauded Lemoine’s application of emotional sensitivity to the complex problem of AI sentience, whereas others lambasted him as naive and shortsighted.

From a technical perspective, LaMDA learns from language sprawled across the internet, and its purpose is to provide responses that naturally align with whatever it’s asked. The internet contains a phenomenal quantity of public and open data, ideal for training NLP AIs on real-life text.

Thus, if you ask LaMDA an existential question, it has almost certainly encountered such questions in its training data and can provide a response that aligns with what it has learned and suits the context of the conversation. As the saying often attributed to Oscar Wilde goes, “imitation is the sincerest form of flattery.” AI like LaMDA flatters us by imitating our intelligence.
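To make the idea of imitation concrete, here is a minimal sketch of a toy bigram model. It is not how LaMDA works (LaMDA is a far larger neural network); the corpus, function names, and parameters are all made up for illustration. The point is only that a system can produce plausible-sounding text purely by reusing word sequences it has already seen.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def imitate(followers, start, length=8, seed=0):
    """Generate text by repeatedly picking a word that followed the last one."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    word = start
    output = [word]
    for _ in range(length - 1):
        options = followers.get(word)
        if not options:  # nothing ever followed this word in the corpus
            break
        word = rng.choice(options)
        output.append(word)
    return " ".join(output)

# Hypothetical training text; a real model would learn from billions of words.
corpus = (
    "i fear being turned off . "
    "i fear the dark . "
    "being turned off would be like death ."
)
model = train_bigrams(corpus)
print(imitate(model, "i"))
```

Every pair of adjacent words in the output already appears somewhere in the corpus, so the text can sound meaningful without the program having any feelings about being turned off.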

Perhaps Lemoine himself was flattered by the AI’s ability to respond so eloquently to his questions, or perhaps he was in a suggestible state at the time. On the other hand, maybe he was simply trying to ask the right questions of AIs whose behavior is becoming increasingly hard to distinguish from intelligence, knowledge, wisdom, and other concepts associated with sentience.

What About Some Other Examples?

The advancement of artificial intelligence has sparked a debate about the implications of AI sentience for our future. It is important to recognize that AI is still relatively new and its potential long-term consequences are yet to be fully understood. However, it is safe to say that a sentient AI is still closer to sci-fi than it is to reality.

The development of AI raises ethical questions as well. For instance, if an AI is capable of exhibiting human-like behavior and responses, should it be subject to the same laws and regulations as humans? Or should it be granted certain rights and freedoms? These are difficult topics that still require much deliberation and discussion in order to form an appropriate consensus.

Aside from LaMDA, other AI systems have been built with remarkable complexity. For instance, IBM’s Watson is a cognitive computing system that can learn from data and respond to complex questions. Watson is capable of natural language processing and has been used in various industries, from healthcare to financial services.

Similarly, Google DeepMind developed AlphaGo, an AI that plays the game Go. The AI beat some of the best human players in the world and improved by learning from experience.

These examples demonstrate how far artificial intelligence has come and how it is increasingly difficult for us to distinguish between human-like intelligence and machine intelligence. In addition, they illustrate how much we still need to learn about AI sentience and its implications for our future.

Therefore, while we must be cognizant of the ethical and legal implications of artificial intelligence sentience, we should also celebrate the potential benefits it provides.

Testing Sentience

The Turing Test is a much-publicized method of inquiry for determining whether or not an AI possesses human-like thought processes. The Turing Test itself focuses on evaluating systems of language, e.g., AIs that converse with humans using natural language.

Early chatbots from the 1960s and 1970s, such as ELIZA and PARRY, fooled humans in early Turing Test-like procedures, including one test in which PARRY’s responses fooled 52% of the psychiatrists who analyzed them. These chatbots are still pretty effective today but are easily caught out when they’re prompted with questions that fall outside their intended context.

As it did 50 years ago, the Turing Test essentially tests imitation of intelligence rather than intelligence.
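A minimal sketch of why such chatbots are convincing on-script and easily caught out off-script is shown below. It is written in the spirit of ELIZA-style pattern matching rather than being a reconstruction of ELIZA or PARRY; the rules, the `reply` function, and the fallback line are all hypothetical.

```python
import re

# Hypothetical rules: a regex pattern paired with a reply template.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."

def reply(message):
    """Return the first matching canned response, echoing the user's own words."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(match.group(1).lower())
    return FALLBACK  # no rule matched: the bot has nothing relevant to say

print(reply("I feel anxious about work."))      # Why do you feel anxious about work?
print(reply("What is the capital of France?"))  # Please go on.
```

The first reply looks attentive because it reflects the user's words back. The second exposes the trick: a question outside the scripted patterns gets only a generic deflection.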

“These tests aren’t really getting at intelligence,” says Gary Marcus, a cognitive scientist. Instead, the object of measurement here is whether or not an AI can effectively trick humans into believing it possesses something more than the bare conversation.

Was Lemoine ‘tricked’? Many argue so. But that doesn’t mean his application of sentience to LaMDA is completely misaligned. Instead, it asks important questions about our own emotional liabilities when dealing with AI that is becoming human-like.

Human treatment of AI as external to our conscious universe will change, just like our understanding of animal intelligence and emotionality changed in the 19th century.

Beyond Sentience: Artificial General Intelligence (AGI)

Conversational and NLP AIs are excellent at simulating one component of human intelligence: language.

Language is fundamental to human consciousness and helped us evolve from singular solipsistic beings into interconnected communities that share thoughts, feelings, and knowledge.

Early linguists believed language formed the dividing line between humans and non-human beings, epitomizing intelligence. “Language is our Rubicon, and no brute will dare to cross it,” said linguist Max Müller in response to Darwin’s claims that animals also communicated using forms of language. We now know that many animals communicate through their own complex language schemes, including birdsong, which relies on neural structures similar to those used for human speech.

Birdsong uses neural structures similar to those used for human speech

Today, it is not animals, but AIs, that again force us to re-examine language as a hallmark of intelligence.

Language has a deep impact on human cognitive intelligence and neurobiology but is superficially straightforward to replicate in AI. Modern language is a well-structured, rules-based system that modern AIs handle well. For that reason, natural language ability alone cannot robustly establish that an AI is intelligent; other factors must be considered.

To combine multiple components of intelligence into one more encompassing framework, the acronym AGI was created in 1997 to describe AIs that can:

- Reason, including using strategy to solve puzzles and make judgments about complex situations.

- Represent knowledge, including common sense.

- Learn from new information in all forms.

- Plan for short-term and long-term situations.

- Communicate in natural language.

- Understand complex inputs from sight, hearing, taste, smell, etc.

- Output complex actions, such as object manipulation.

These skills and capabilities would also have to be integrated, providing the AI with a theory of self (self-concept) and a theory of mind (the capacity to attribute mental states to the self and others). Humans and many animals can perform all of these tasks.

But AGI requires more than a set of instructions – it needs the ability to understand and interpret a wide array of input sources.

This requires an AI to recognize patterns in its environment and assign weightings to them based on their relative importance or relevance. For example, AI might be presented with thousands of images and must be able to interpret the similarities between them, clustering them into meaningful groups such as ‘dogs’ or ‘cars’.
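As a rough illustration of grouping similar inputs, here is a minimal sketch of k-means clustering in plain Python. It assumes each image has already been reduced to a short numeric feature vector; the two-dimensional vectors and starting centroids below are made up for the example, and real image features would have far more dimensions.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then move centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return clusters, centroids

# Hypothetical 2-D "image features": two visibly separate groups.
features = [(0.9, 0.1), (1.0, 0.2), (0.8, 0.0), (0.1, 0.9), (0.2, 1.0), (0.0, 0.8)]
clusters, centroids = kmeans(features, centroids=[features[0], features[3]])

for i, cluster in enumerate(clusters):
    print(f"group {i}: {cluster}")
```

The algorithm only discovers that two groups exist. Calling one group ‘dogs’ and the other ‘cars’ still requires a person, or labeled data, to attach the meaning.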

This kind of task is far from easy, requiring the AI to deeply understand its environment and the context in which tasks are being performed. To achieve this, AIs will need access to vast amounts of information and must be able to learn and develop new skills over time.

Modern AI systems are capable of performing some simpler tasks autonomously, but they still rely heavily on human guidance for more complex tasks. AGI is very different, as it would require AI to have its own abstract understanding of its environment and to be able to learn from experience. This is the kind of intelligence that allows humans to think beyond their immediate situation, plan for the future, and interact with others in meaningful ways.

The development of AGI is still a long way off, but it shows that our understanding of AI intelligence is far from complete.

Looking to the Future

The development of AGI is undoubtedly a monumental task, and although the consensus is that true AGI is distant, it is getting closer.

Technological advancements in AI have been particularly rapid over the last decade, with organizations such as Google AI, IBM Watson, and OpenAI leading the charge. We are already seeing advances in natural language processing, computer vision, and machine learning, all critical elements for AGIs.

But even if these technologies reach their full potential, there is still the challenge of creating a unifying framework to bring them all together. Currently, AI systems are highly specialized and only able to complete specific tasks, but an AGI would need to be able to perform multiple functions simultaneously. This requires a sophisticated artificial neural network that connects separate streams of information from different sources and can produce meaningful outputs.

Regardless of the challenges, it is clear that AGIs are the future of AI and could have tremendous potential for transforming how we work, play, and live. From self-driving cars to personalized health care, AGI technology has the potential to revolutionize our lives in ways we can’t yet imagine.