Artificial intelligence (AI) has risen from a fringe concept in sci-fi to one of the most influential technologies conceived.

Building systems that can understand visual information has been a cornerstone of AI research and development. This allows machines to ‘see’ and respond to the world around them.

The domain of AI related to vision and visual data is called computer vision (CV). Computer vision equips computers with the ability to process, interpret, analyze, and understand visual data.

Computer vision has many practical applications, ranging from autonomous vehicles (AVs) to medical diagnostics. Apps equipped with computer vision are now ubiquitous. Google Lens, for example, extracts features from images taken with your phone to work out what they show and look them up on the internet.

In this article, we will explore computer vision, how it works, and some of its most exciting applications.

What is Computer Vision?

Computer vision equips digital systems with the ability to understand and interpret visual data.

The fundamental goal of computer vision is to create machines that can “see” and interpret the visual world.

Visual data includes both visible light, which humans can see, and other types of invisible light such as ultraviolet and infrared.

The “Computer” and the “Vision”

We live in a world illuminated by the electromagnetic spectrum.

Light emitted by the sun and other light sources strikes reflective materials and enters our eyes. Humans can see but a small portion of the electromagnetic spectrum, called “visible light”.

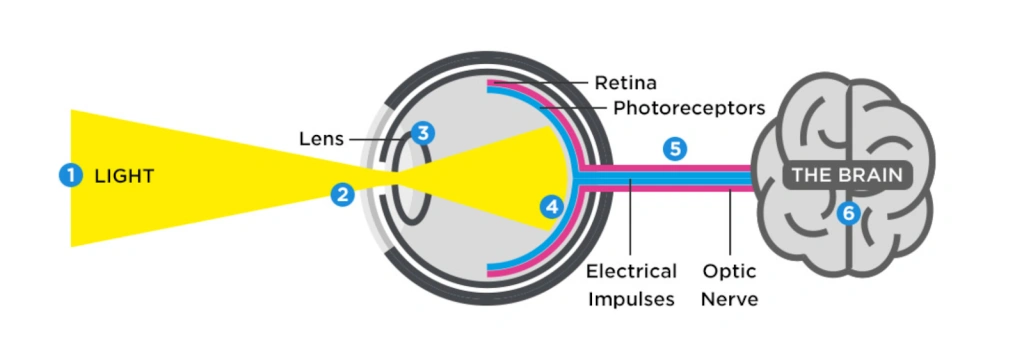

Our retina (light-sensitive tissue in the eye) contains photoreceptors that turn light into electrical signals, which are then passed to our brain.

In many ways, this is the easy bit. The eye is the lens – it fulfils a mechanical function, similar to a camera lens. The first cameras were invented in the early 19th century, and the first video cameras around 1890, around 100 years before competent computer vision technology.

In other words, physically capturing an image is simpler than understanding it.

Computer vision links vision technology, e.g., cameras – the “eyes” – to a system of understanding – the “brain.”

With computer vision, visual imaging devices like cameras can be combined with computers to derive meaning from visual data.

The computer is analogous to the brain, and the vision to the eye.

Early Progress in Computer Vision

The first digital scan was created in 1957 by Russell Kirsch, birthing the concept of the ‘pixel.’ Soon after, the first digital image scanners were built, which could turn visual images into grids and numbers.

In the 1960s, researchers at MIT launched research into computer vision and believed they could attach a camera to a computer and have it “describe what it saw.” This appears somewhat simple on paper, but the realities of accomplishing it quickly dawned on the computer science community.

MIT’s early investigations into CV triggered a series of international projects culminating in the first functional computer vision technologies.

The first milestone was met around 1980, when Japanese researcher Kunihiko Fukushima built the Neocognitron, a neural network inspired by the human brain’s primary visual cortex.

The pieces were coming together – these early CV systems linked a system of vision, a camera, with a system of understanding, a computer.

Fukushima’s work eventually culminated in the development of modern convolutional neural networks.

Computer Vision Timeline

Computer vision has a short history. Here’s a brief timeline of how computer vision has progressed:

- 1959: The first digital image scanner was invented, enabling the conversion of images into numerical grids.

- 1963: Larry Roberts, considered the father of computer vision, described the process of extracting three-dimensional information about solid objects from two-dimensional photographs.

- 1966: Marvin Minsky from MIT instructed a graduate student to connect a camera to a computer and “have it describe what it sees.”

- 1980: Japanese computer scientist Kunihiko Fukushima built the precursor to modern Convolutional Neural Networks, called the “Neocognitron.”

- 1991-93: Multiplex recording devices were introduced, including advanced video surveillance for ATMs.

- 2001: Paul Viola and Michael Jones introduced the first face detection framework that worked virtually in real time, known as the Viola-Jones detector.

- 2009: Google began testing autonomous vehicles (AVs).

- 2010: Google released Goggles, a precursor to Lens. Goggles was an image recognition app for searches based on pictures taken by mobile devices. In the same year, Facebook and other Big Tech firms began using facial recognition to help tag photos.

- 2011: Facial recognition was used to confirm the identity of Osama bin Laden after he was killed in a US raid.

- 2012: Google Brain’s neural network accurately recognized pictures of cats.

- 2015: Google launched the open-source machine learning system TensorFlow.

- 2016: Google DeepMind’s AlphaGo model defeated Lee Sedol, a world champion Go player.

- 2017: Apple released the iPhone X, advertising face recognition as one of its primary new features. Face recognition has become standard in phones and camera devices.

- 2019: The Indian government announced a law enforcement facial recognition app enabling officers to search images through a mobile app. The UK High Court ruled that using facial recognition technology to search for people in crowds is lawful.

- To the present day: Computer vision is ubiquitous. Advanced AVs are on the brink of mainstream rollout as of 2023 as Tesla and other manufacturers seek regulator approval.

How Does Computer Vision Work?

Computer vision is a complex process that involves many stages, including image acquisition, pre-processing, data labeling, feature extraction, and classification.

Generally speaking, modern computer vision works via a combination of image processing techniques, algorithmic processing, and deep neural networks.

The process starts with data ingestion: an image or video feed is captured by a camera or some other visual sensor. Next, images are pre-processed into a digital format that the system can understand.
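As a rough illustration of the pre-processing step, the sketch below converts a tiny RGB image to grayscale and scales the values to the 0-1 range. The function names are hypothetical, and the brightness weights (the widely used BT.601 luma coefficients) are an assumption; real pipelines vary in what pre-processing they apply.

```python
def to_grayscale(image):
    """Collapse each (red, green, blue) pixel into one brightness value (0-255)."""
    # Assumed weights: the common BT.601 luma coefficients.
    return [
        [0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
        for row in image
    ]


def normalize(gray):
    """Scale brightness values from the 0-255 range down to 0.0-1.0."""
    return [[value / 255.0 for value in row] for row in gray]


# A 2x2 RGB image: white, black, pure red, pure blue.
image = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 0, 255)],
]

pixels = normalize(to_grayscale(image))
for row in pixels:
    print([round(value, 3) for value in row])
```

After this step, every pixel is a single number between 0 and 1, which is a convenient input format for the later stages.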

Initially, the model uses various image analysis techniques, such as edge detection, to identify key features in the image. In the case of a still image, a convolutional neural network (CNN) helps the model “look” by analyzing pixels and performing convolutions, a type of mathematical calculation. Recurrent neural networks (RNNs) are used for video data.
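To make the idea of a convolution concrete, here is a minimal sketch that slides a 3x3 Sobel kernel over a small grayscale image to highlight vertical edges. It is an illustration in plain Python, not how a production CNN is implemented; the function name `convolve2d` and the toy image are assumptions. As in most deep learning libraries, the kernel is applied without flipping it.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the element-wise products."""
    k = len(kernel)
    out_height = len(image) - k + 1
    out_width = len(image[0]) - k + 1
    output = []
    for y in range(out_height):
        row = []
        for x in range(out_width):
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[y + i][x + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Sobel kernel that responds to left-to-right changes in brightness.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# 4x4 grayscale image: dark on the left half, bright on the right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

edges = convolve2d(image, SOBEL_X)
print(edges)  # large values mark the dark-to-bright boundary
```

In a CNN, the kernel values are not fixed by hand like this; they are learned during training.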

Computer vision with deep learning has revolutionized the capability of models to understand complex visual data by passing data through layers of nodes that perform iterative calculations.

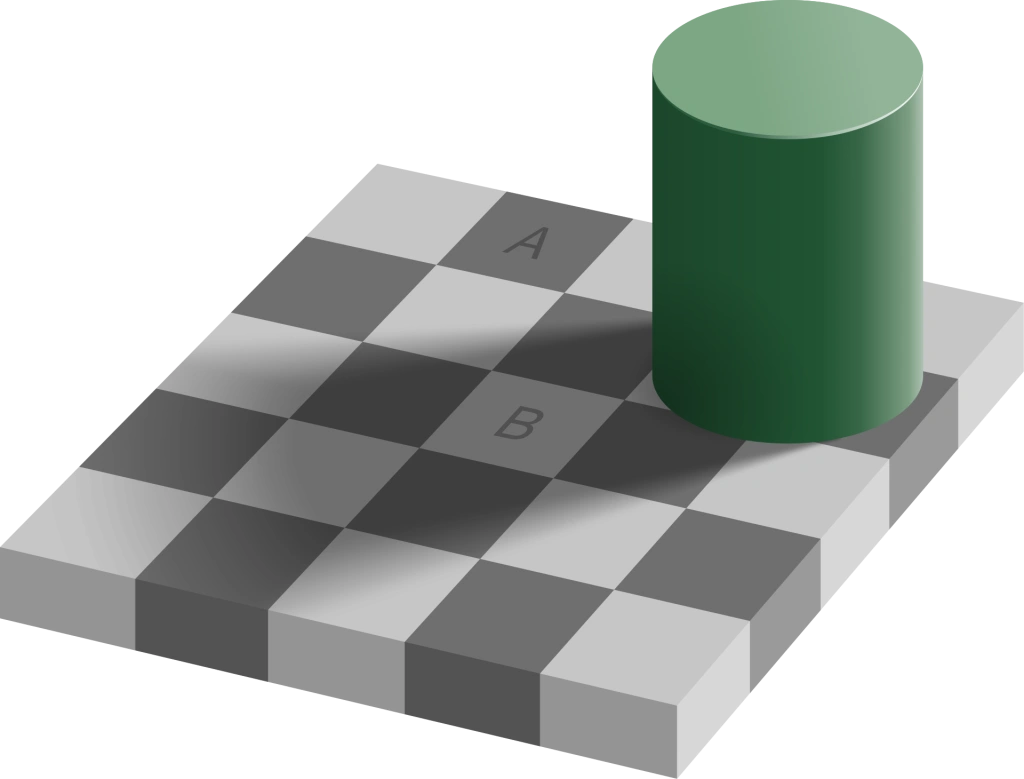

This process is similar to how humans understand visual data. We tend to see edges, corners, and other stand-out features first. We then work to determine the remainder of the scene, which involves a fair amount of prediction. This is partly how optical illusions work – our brain predicts the probable characteristics of visual data, much as CV algorithms do.

Comparing Different Approaches to Computer Vision

Image classification, object detection and image segmentation are the three main types of computer vision tasks.

There are several others, and while the list is not exhaustive, the most common tasks include:

1: Image Classification: The task of categorizing images into distinct classes or labels based on their content. For example, classifying images of cats, dogs, and birds.

2: Object Detection: The process of identifying and locating specific objects within an image, usually by drawing bounding boxes around them and associating them with class labels. For example, detecting cars, pedestrians, and traffic signs in a street scene.

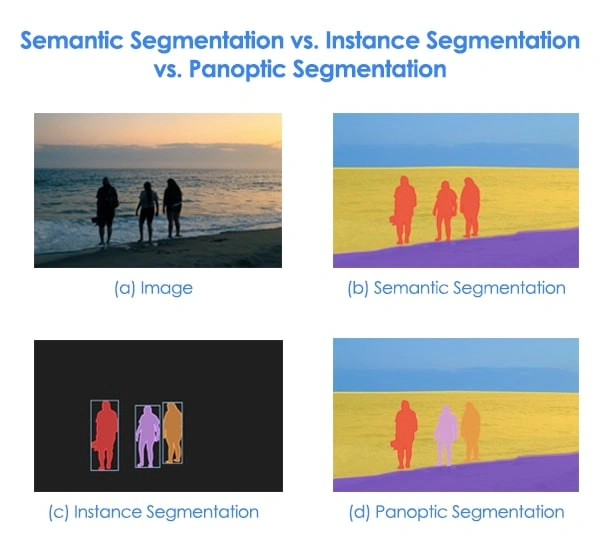

3: Image Segmentation: The task of partitioning an image into multiple segments or regions, often based on the objects or distinct features present. There are two main types of image segmentation:

A) Semantic Segmentation: Assigning a class label to each pixel in the image, resulting in a dense classification map where each pixel is associated with a specific class.

B) Instance Segmentation: Extending semantic segmentation to distinguish and separate instances of the same object class, such as differentiating between multiple cars in an image.

4: Object Tracking: The process of locating and following the movement of specific objects over time in a sequence of images or video frames. This is important in applications like video surveillance, autonomous vehicles, and sports analytics.

5: Optical Character Recognition (OCR): The process of converting printed or handwritten text in images into machine-readable and editable text. OCR is commonly used in document scanning, license plate recognition, and text extraction from images.
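Taking the first task in the list, image classification, a model typically ends by producing one raw score per class and picking the most probable one. The sketch below shows that final step only, using softmax; the scores are made-up numbers standing in for a real model's output, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def classify(scores, labels):
    """Return the most probable label and its probability."""
    probabilities = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probabilities[i])
    return labels[best], probabilities[best]


LABELS = ["cat", "dog", "bird"]

# Made-up scores standing in for the output of a trained model.
label, confidence = classify([2.0, 0.5, -1.0], LABELS)
print(label, round(confidence, 2))
```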

The Difference Between Object Detection and Image Segmentation

Understanding the difference between object detection and image segmentation is often particularly tricky.

Object Detection

- Here, the input is typically an image with 3 values per pixel (red, green and blue), or 1 value per pixel if black and white. Training data is labeled with boxes (or polygons) which identify objects.

- The outputs of object detection are predicted bounding boxes, each defined by a corner position and size.
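A bounding box defined by a corner position and size can be compared against a labeled box using intersection over union (IoU), a common way to score how well two boxes overlap. The sketch below assumes boxes are stored as `(x, y, width, height)` with `(x, y)` as the top-left corner; that layout and the function names are assumptions for illustration.

```python
def to_corners(box):
    """Convert (x, y, width, height) into (left, top, right, bottom)."""
    x, y, width, height = box
    return x, y, x + width, y + height


def iou(box_a, box_b):
    """Intersection over union: 1.0 for identical boxes, 0.0 for no overlap."""
    a_left, a_top, a_right, a_bottom = to_corners(box_a)
    b_left, b_top, b_right, b_bottom = to_corners(box_b)
    overlap_w = max(0, min(a_right, b_right) - max(a_left, b_left))
    overlap_h = max(0, min(a_bottom, b_bottom) - max(a_top, b_top))
    intersection = overlap_w * overlap_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - intersection
    return intersection / union if union else 0.0


predicted = (10, 10, 20, 20)  # hypothetical model output
labeled = (20, 10, 20, 20)    # hypothetical human-drawn box

print(round(iou(predicted, labeled), 3))
```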

Image Segmentation

- Here, the input is also an image with 3 values per pixel (red, green and blue), or 1 value per pixel if black and white. Training data is labeled with pixel masks which segment objects at pixel level. This can be semantic segmentation (simpler), instance segmentation (middle) or panoptic segmentation (more complex). Instance segmentation and panoptic segmentation enable models to count individual instances of objects (often called “things”), whereas semantic segmentation only assigns a class to each pixel, e.g. “people”, rather than person A, person B, etc.

- The output is a mask image with 1 value per pixel containing the assigned category.
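The difference between a semantic mask and instance-level information can be sketched with a toy mask. Below, a semantic mask stores one class ID per pixel, and a flood fill counts separate connected regions of a class. The flood fill is only a simple stand-in for illustration (touching objects would be merged into one region); it is not how instance segmentation models work, and all names are hypothetical.

```python
from collections import Counter


def pixels_per_class(mask):
    """Count how many pixels were assigned to each class ID."""
    return Counter(value for row in mask for value in row)


def count_regions(mask, class_id):
    """Count 4-connected regions of `class_id` using a flood fill."""
    height, width = len(mask), len(mask[0])
    seen = set()
    regions = 0
    for y in range(height):
        for x in range(width):
            if mask[y][x] != class_id or (y, x) in seen:
                continue
            regions += 1
            seen.add((y, x))
            stack = [(y, x)]
            while stack:
                cy, cx = stack.pop()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and mask[ny][nx] == class_id and (ny, nx) not in seen:
                        seen.add((ny, nx))
                        stack.append((ny, nx))
    return regions


BACKGROUND, PERSON = 0, 1

# Semantic mask: two separate people, but both carry the same class ID.
mask = [
    [1, 1, 0, 0, 1],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
]

print(pixels_per_class(mask)[PERSON])  # pixels labeled "person"
print(count_regions(mask, PERSON))     # separate "person" regions
```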

Data Annotation for Computer Vision

Computer vision algorithms and applications can be both supervised and unsupervised.

In the case of supervised machine learning, algorithms are trained on a large dataset of labeled images.

During training, the machine learning algorithms learn to recognize patterns in the training dataset in order to classify new images based on similar features.

For example, if you want a model to understand road signs, you first need to teach it what the road signs are.
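As a deliberately simple stand-in for supervised training, the sketch below "teaches" a nearest-centroid classifier using a handful of labeled examples. The two features (how red a sign is, and its number of corners divided by 10) and all values are hypothetical; a real CV model would learn its own features from labeled images rather than use hand-picked ones.

```python
import math


def train(examples):
    """Average the feature vectors of each label to get one centroid per class."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, features):
    """Return the label whose centroid is closest to the new example."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical labeled data: ([redness, corners / 10], label).
training_data = [
    ([0.90, 0.8], "stop"),
    ([0.85, 0.8], "stop"),
    ([0.20, 0.3], "warning"),
    ([0.30, 0.3], "warning"),
]

centroids = train(training_data)
print(predict(centroids, [0.80, 0.7]))   # an unseen, mostly red, many-cornered sign
print(predict(centroids, [0.25, 0.35]))  # an unseen, less red, few-cornered sign
```

The quality of the labels directly shapes the centroids, which is why mislabeled examples lead to poor predictions.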

Here’s a brief rundown of data labeling for machine learning computer vision (CV):

- Purpose of the dataset: Data annotation teams will establish the use case and purpose of the model. For example, Aya Data labeled photos of maize disease to help train a maize disease classification model. In this case, the dataset needs a diverse selection of diseased and non-diseased maize leaves, with a wide range of labels that describe different diseases.

- Annotation types: The annotation of data varies depending on the images and purpose of the model. Common labeling techniques include bounding boxes, polygons, points, and semantic segmentation, among others. Labels are applied to the features the model is expected to identify.

- Annotation complexity: Some tasks require complex annotations. For example, object detection can require precise bounding boxes around objects, while semantic segmentation might require pixel-by-pixel labeling.
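To show what a labeled image might look like as data, here is a minimal sketch of an annotation record. The class names, field names, file name, and disease label are all hypothetical; real annotation tools and dataset formats each define their own schema.

```python
from dataclasses import dataclass, field


@dataclass
class Annotation:
    label: str         # what the region shows
    kind: str          # "bounding_box", "polygon", or "point"
    coordinates: list  # meaning depends on `kind`


@dataclass
class LabeledImage:
    file_name: str
    width: int
    height: int
    annotations: list = field(default_factory=list)

    def labels(self):
        """Return the distinct labels used in this image, sorted by name."""
        return sorted({annotation.label for annotation in self.annotations})


# Hypothetical record for one maize leaf photo.
record = LabeledImage("maize_leaf_001.jpg", width=640, height=480)
record.annotations.append(
    Annotation("leaf_blight", "bounding_box", [120, 80, 200, 150])  # x, y, width, height
)
record.annotations.append(
    Annotation("healthy", "polygon", [(10, 10), (90, 15), (60, 70)])
)

print(record.labels())
```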

Read our ultimate guide to data labeling for more information.

The process of data labeling takes place before any data is fed into the model.

There is a famous phrase: “rubbish in, rubbish out!” To build accurate, efficient models that generalize well, preparing high-quality datasets is essential.

While the model training, tuning, and optimization process is also crucial for building a quality model, the foundations need to be solid, which means producing high-quality datasets.

Get in touch for more information.

Comparing Approaches to Data Labeling for Computer Vision

Depending on the intent of the model, image and video data can be labeled in many ways.

It all depends on what model you’re using.

For example, popular models like YOLO, for object detection, require bounding box or polygon-labeled data.

Image segmentation models such as Mask R-CNN, SegNet and U-Net require pixel-segmented labels.

Here are the core types of data annotations:

1. Polygon Annotation

Characteristics

- Involves drawing a polygon around the object by connecting a series of points.

- Captures the true shape and boundaries of the object.

- Can handle complex or irregular shapes.

Advantages

- Provides precise object boundaries, leading to more accurate training data.

- Adaptable to a wide range of object shapes and sizes.

- Useful in handling occluded objects, where only parts of the object are visible.

Use Cases

- Object detection and recognition tasks where accurate object localization is crucial.

- Instance segmentation tasks where individual instances of objects need to be separated.

- Applications where complex shapes or occlusions are common, such as medical imaging or autonomous vehicles.
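One way to see why polygons capture shape more precisely than boxes is to compare a polygon's area with the area of the rectangle that encloses it. The sketch below uses the shoelace formula for the polygon area; the function names and the toy triangle are assumptions for illustration.

```python
def polygon_area(points):
    """Shoelace formula: area of a simple (non-self-intersecting) polygon."""
    total = 0
    for i in range(len(points)):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % len(points)]  # wrap around to close the shape
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def enclosing_box(points):
    """Smallest axis-aligned box around the polygon, as (x, y, width, height)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)


# A triangular object outlined with three annotation points.
triangle = [(0, 0), (4, 0), (0, 3)]

box = enclosing_box(triangle)
fill_ratio = polygon_area(triangle) / (box[2] * box[3])
print(box, fill_ratio)  # share of the box that is actually object
```

A low fill ratio means a bounding box around the same object would include a lot of background.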

2. Bounding Boxes

Characteristics

- Involves drawing a rectangular box around the object, encapsulating the entire object within the box.

- Simpler and faster to create than polygons, but less precise.

- Does not capture the exact shape of the object.

Advantages

- Faster and easier to annotate, making it suitable for large-scale datasets.

- Sufficient for tasks where precise object boundaries are not critical.

- Easier to compute, leading to reduced computational costs during model training and inference.

Use Cases

- Object detection and recognition tasks where precise object boundaries are not necessary.

- Applications where simplicity and computational efficiency are more important than precision, such as surveillance or monitoring systems.

3. Semantic Segmentation

Characteristics

- Involves labeling each pixel in the image with its corresponding class or object label.

- Provides a dense, pixel-wise labeling of the entire image.

- Does not distinguish between individual instances of objects within the same class.

Advantages

- Generates a complete understanding of the scene, capturing both object and background information.

- Provides detailed segmentation of objects, making it suitable for tasks where object boundaries are important.

- Enables models to learn the relationships between different regions and objects in the image.

Use Cases

- Applications where understanding the entire scene is crucial, such as autonomous navigation or environmental monitoring.

- Tasks where precise object boundaries are important, such as medical imaging or satellite imagery analysis.

- Applications that require a holistic understanding of the relationships between objects and their surroundings.

4. Instance Segmentation

Characteristics

- Involves labeling each pixel in the image with its corresponding class or object label while also distinguishing between individual instances of objects within the same class.

- Combines the benefits of both semantic segmentation and object detection by providing pixel-wise labeling as well as instance-level information.

- Handles overlapping objects and can differentiate between multiple instances of the same class.

Advantages

- Enables more precise understanding of the scene, capturing both object and background information as well as instance-specific data.

- Provides detailed segmentation of objects and their boundaries, making it suitable for tasks where instance-level information is crucial.

- Facilitates more advanced applications that require understanding of individual object instances, their relationships, and interactions.

Use Cases

- Applications where instance-level object recognition and understanding is crucial, such as autonomous navigation, robotics, or video analysis.

- Tasks where precise object boundaries and individual instances need to be distinguished, such as medical imaging or satellite imagery analysis.

- Scenarios where overlapping objects or multiple instances of the same class need to be identified and separated, like crowd analysis or traffic monitoring.

5. Panoptic Segmentation

Characteristics

- Combines the tasks of semantic segmentation and instance segmentation to provide a unified and coherent scene understanding.

- Labels each pixel in the image with its corresponding class or object label while also differentiating between individual instances of objects within the same class.

- Handles both “stuff” (amorphous regions like sky, water, or grass) and “things” (countable objects like cars, people, or animals).

Advantages

- Offers a comprehensive understanding of the scene by capturing both object and background information as well as instance-level data.

- Addresses the challenges of both semantic and instance segmentation, providing a more complete picture of the image.

- Enables advanced applications that require a holistic understanding of objects, their instances, and the relationships between different regions in the image.

Use Cases

- Applications that demand a complete and unified understanding of the scene, such as autonomous navigation, environmental monitoring, or urban planning.

- Tasks where both “stuff” and “things” need to be identified and differentiated, like land cover classification or natural resource management.

- Scenarios where a coherent understanding of the relationships between objects, their instances, and their surroundings is necessary, such as disaster response or 3D scene reconstruction.
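A panoptic label has to carry both a class and an instance for every pixel. One simple way to sketch this is to pack the two into a single integer per pixel. The encoding below (class ID times 1000 plus instance ID, with instance 0 reserved for “stuff”) is an assumed convention for illustration; datasets and tools define their own encodings.

```python
INSTANCE_SLOTS = 1000  # assumed upper bound on instances per class


def encode(class_id, instance_id=0):
    """Pack a class ID and instance ID into one integer (instance 0 = "stuff")."""
    return class_id * INSTANCE_SLOTS + instance_id


def decode(panoptic_id):
    """Unpack an integer back into (class_id, instance_id)."""
    return divmod(panoptic_id, INSTANCE_SLOTS)


SKY, CAR = 1, 2

# Toy 2x3 panoptic map: sky is "stuff", the two cars are separate "things".
panoptic = [
    [encode(SKY), encode(SKY), encode(SKY)],
    [encode(CAR, 1), encode(SKY), encode(CAR, 2)],
]

car_instances = {
    decode(pixel)[1]
    for row in panoptic
    for pixel in row
    if decode(pixel)[0] == CAR
}
print(len(car_instances))  # number of distinct cars in the scene
```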

Applications of Computer Vision

Computer vision has many practical applications across virtually every sector and industry.

Here are a few examples:

- Autonomous Vehicles: Self-driving cars and other vehicles rely on computer vision to navigate and make decisions on the road. Cameras, LIDAR, and radar sensors are used to capture images and other data about the car’s surroundings. Visual data is analyzed to guide the vehicle.

- Medical Diagnosis: Computer vision is used to analyze medical images, such as X-rays and MRIs, to detect abnormalities. Machine learning algorithms can learn to identify patterns in images associated with specific conditions, such as cancer.

- Retail: Computer vision is used to analyze customer behavior and improve in-store shopping experiences. For example, Amazon is constructing stores where you simply walk in, choose your products, and walk out. They plan to use computer vision to see what you’ve picked up, ID your face, and bill you to your account automatically.

- Security: Computer vision is used in security and law enforcement to identify potential threats and improve safety. For example, law enforcement can use facial recognition technology to identify known criminals or terrorists in crowds.

- Agriculture: Computer vision is used in agriculture to improve crop yields, monitor livestock and reduce waste. For example, drones equipped with cameras can be used to monitor agricultural land from above and detect early signs of disease or pest infestation.

- Online Search and Content Verification: Computer vision is used in reverse image search tools that help users find the original source or visually similar versions of an image online. By analyzing image features instead of relying on keywords, these tools assist in verifying authenticity, identifying products, and detecting copyright infringement.

Challenges and Limitations

While the field of computer vision has made significant progress in recent years, there are still many challenges and limitations to overcome.

1: Volume of Data

Complex models are extremely data-hungry. The more complex the model, the more data it needs.

Creating accurate training data is resource-intensive, and training models on large datasets is computationally expensive.

Obtaining labeled data can be time-consuming and expensive, particularly for specialized applications. For instance, human teams must work with domain specialists when labeling data for high-risk applications such as medical diagnostics.

Automated and semi-automated data labeling is helping alleviate this bottleneck, but replacing skilled human annotation teams is proving difficult.

2: Complexity of Visual Data

Another challenge of computer vision is the complexity of the visual world, which is forever changing. This poses an issue when training models with only the data available right now.

For example, autonomous vehicles are trained on datasets containing street features contemporary at the time, but these change. For instance, there are now many more e-scooters on the roads than there were five years ago.

To respond to changes in the visual world, complex CV models like those used in self-driving cars must combine supervised models with unsupervised models to extract new features from the environment.

3: Latency

Latency is one of the most pressing issues facing real-time AI models. We take our reaction times for granted, but they result from millions of years of complex evolution.

Visual data enters the eye before traveling down the optic nerve to the brain. Our brain then starts processing data and makes us conscious of it.

To build robots that respond to stimuli in real time like biological systems do, we need to build extremely low-latency models.
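Whether a model is fast enough for real-time use can be framed as a time budget per frame: at 30 frames per second, each frame must be processed in roughly 33 ms. The sketch below measures the average latency of a placeholder function and compares it with that budget. `fake_model` is a stand-in workload, not a real vision model, and the 30 FPS target is an assumption.

```python
import time


def frame_budget_ms(fps):
    """Time available to process one frame at a given frame rate."""
    return 1000.0 / fps


def measure_latency_ms(process, frame, runs=20):
    """Average wall-clock time of `process(frame)` in milliseconds."""
    start = time.perf_counter()
    for _ in range(runs):
        process(frame)
    return (time.perf_counter() - start) * 1000.0 / runs


def fake_model(frame):
    # Placeholder workload standing in for a real vision model.
    return sum(sum(row) for row in frame)


frame = [[1] * 64 for _ in range(64)]  # toy 64x64 grayscale frame

latency = measure_latency_ms(fake_model, frame)
budget = frame_budget_ms(30)
print(f"latency {latency:.3f} ms, budget {budget:.1f} ms, keeps up: {latency <= budget}")
```

In practice, the measured figure would also need to include image capture, pre-processing, and any action taken on the result, not just the model itself.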

Summary: Definitive Guide to Computer Vision in AI

Computer vision is a fascinating field of AI that has many practical applications, many of which have already changed lives.

Despite the field being only around 60 years old, we’ve now built advanced technologies that can “see” and understand visual data in a similar fashion to humans, and at similar speeds.

While computer vision has made massive progress in recent years, there are still many barriers to overcome for it to fully realize its potential. However, at current rates of development, it’s only a matter of time.

The future of computer vision relies on high-quality training data, which specialist data providers like Aya Data provide. Contact us to discuss your next computer vision project.