Video annotation is a key subcategory of data annotation, widely used in training AI and machine learning models, especially those focused on computer vision. But what is video annotation, and how does it work in real-world AI systems?

In this guide, we’ll break down the basics of video data annotation, including its importance, methods, and best practices. Whether you’re new to video annotation for machine learning or looking to scale your annotation process, this article is designed to give you practical and actionable insight.

What is Video Annotation?

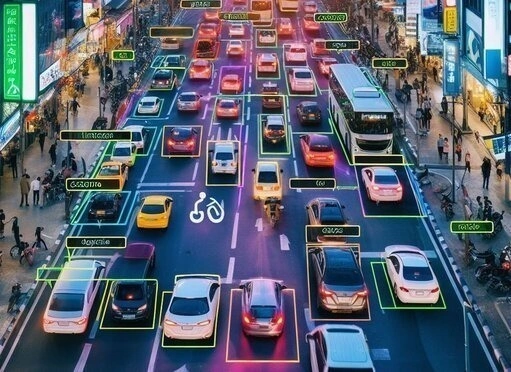

Video annotation is the process of labeling elements within video footage, such as objects, actions, or events, either frame by frame or across segments. This labeled data helps machine learning models understand, interpret, and predict patterns in video content.

It’s essential for training systems in:

- Object detection and tracking

- Activity recognition

- Surveillance and security

- Autonomous vehicles

- Augmented and virtual reality

With accurate video annotations, AI systems can analyze movements, recognize behaviors, and learn from complex visual environments.

How Video Annotation Works

Video annotation can be:

- Manual: Done by trained data annotators using tools to apply bounding boxes, polygons, or keypoints.

- Automated: Supported by AI-assisted platforms that streamline the labeling process.

- Outsourced: Many companies choose video annotation outsourcing to scale faster with expert support.

Techniques include:

- Bounding boxes for object tracking

- Semantic segmentation for pixel-level labeling

- Temporal annotation to understand action sequences

- 3D annotation for depth and spatial context
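As a rough illustration of temporal annotation, the sketch below expands labeled time segments into one label per frame, which is a common input shape for action-recognition training. The function name `labels_per_frame`, the `(start_s, end_s, label)` tuple layout, and the `"background"` default are assumptions made for this example, not part of any particular annotation tool.

```python
def labels_per_frame(segments, n_frames, fps, default="background"):
    """Expand (start_s, end_s, label) segments into one label per frame.

    Segment ends are treated as exclusive; frames not covered by any
    segment keep the default label.
    """
    labels = [default] * n_frames
    for start_s, end_s, label in segments:
        first = max(0, round(start_s * fps))
        last = min(n_frames, round(end_s * fps))
        for frame in range(first, last):
            labels[frame] = label
    return labels


# Hypothetical one-second clip at 10 frames per second.
clip_labels = labels_per_frame(
    [(0.0, 0.5, "walk"), (0.5, 1.0, "run")], n_frames=10, fps=10
)
```

Storing segments rather than per-frame labels keeps the annotation compact; the expansion only needs to happen when the training pipeline asks for it.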

Why Is Video Annotation Important for Machine Learning?

Without properly labeled data, AI models can’t accurately interpret videos. Video annotation for machine learning ensures that your models are trained with high-quality, consistent inputs, leading to better performance and fewer errors in production.

Overall, video annotation is used to advance computer vision and AI technologies, enabling machines to comprehend and interact with visual content in a more meaningful way.

Video Annotation vs. Image Annotation: What’s the Difference?

While both video annotation and image annotation involve tagging visual data, they serve distinct purposes, and video annotation offers several unique advantages.

1. Contextual Awareness

Video annotation captures object interactions across multiple frames, offering deeper context than a single image. This helps AI models understand movement, behavior, and relationships over time, which is critical for dynamic scenes.

2. Frame Interpolation

By labeling objects in key frames, annotation tools can interpolate movement across untagged frames. This results in smoother, more accurate tracking of objects throughout a video.

3. Temporal Context

Unlike static images, video allows models to analyze an object’s past, present, and projected future states. This temporal understanding is essential for tasks like object tracking and behavior prediction.

4. Real-World Applications

Video annotation powers complex use cases such as:

- Activity recognition

- Surveillance systems

- Autonomous driving

- Augmented and virtual reality

These applications demand a deep understanding of motion and sequence, something image annotation alone can’t provide.

An Abundance of Information

In comparison to images, videos possess a more intricate data structure, enabling them to convey richer information per unit of data. For instance, a static image cannot indicate the direction of vehicle movement. Conversely, a video not only provides direction but also allows estimation of speed relative to other objects. Annotation tools facilitate the incorporation of this supplementary data into your dataset for ML model training.

Additionally, video data can leverage preceding frames to track obscured or partially hidden objects, a capability lost in static images.
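To make the vehicle example concrete, here is a minimal sketch of how direction and speed could be estimated from the center of the same object's bounding box in two frames. The function name `estimate_motion` and its parameters are hypothetical, the result is in pixels per second (not real-world units), and the heading follows image coordinates, where the y-axis points down.

```python
import math


def estimate_motion(center_a, center_b, frame_a, frame_b, fps):
    """Return (speed in pixels/second, heading in degrees) between two observations.

    center_a and center_b are (x, y) box centers of the same object,
    observed at frame indices frame_a and frame_b.
    """
    elapsed_s = (frame_b - frame_a) / fps
    if elapsed_s <= 0:
        raise ValueError("frame_b must come after frame_a")
    dx = center_b[0] - center_a[0]
    dy = center_b[1] - center_a[1]
    speed = math.hypot(dx, dy) / elapsed_s
    heading = math.degrees(math.atan2(dy, dx))
    return speed, heading


# Hypothetical car that moved 30 px right and 40 px down in one second.
speed, heading = estimate_motion((100, 200), (130, 240), 0, 30, fps=30)
```

A single image yields only one center point, so neither value can be computed from it; that is the extra information the video carries.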

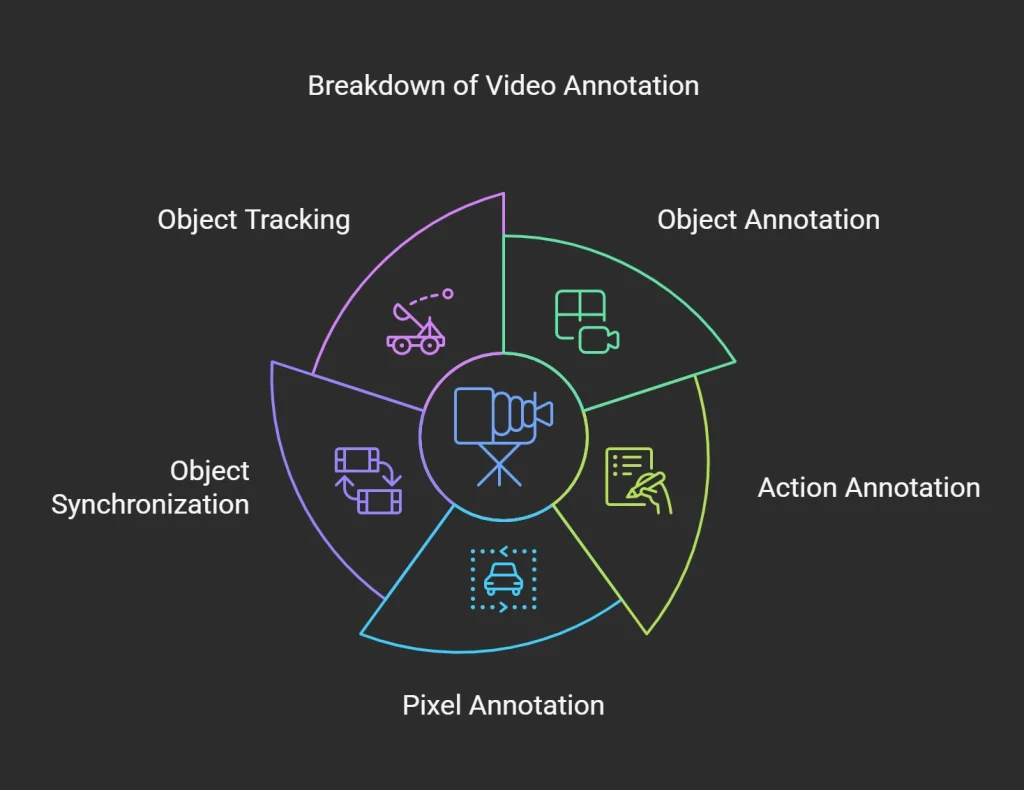

The Labeling Process

The labeling process in video annotation involves annotating various elements, such as objects, actions, and pixels, within video frames to provide valuable information for training computer vision models. However, video annotation presents additional challenges compared to image annotation due to the need for object synchronization and tracking between frames.

Accurate video annotation requires more than just tagging objects; it demands precision, consistency, and contextual awareness. Annotators must carefully track objects across frames, maintaining consistency in position, size, and appearance as they move throughout the video. This calls for sharp attention to detail and a strong understanding of the scene’s context.

Equally important is the use of customized label structures and well-organized metadata. Clear labeling frameworks reduce the risk of misclassification and ensure machine learning models interpret the data correctly. Metadata, such as timestamps and object attributes, adds an extra layer of context, helping models process sequences more intelligently.
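One way to picture a structured label with metadata is a small record type like the sketch below. The `FrameAnnotation` class, its field names, and the `make_annotation` helper are illustrative assumptions; real annotation tools each define their own export schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class FrameAnnotation:
    track_id: int        # same ID for one object across all frames
    label: str           # class name from the agreed label set
    frame_index: int
    timestamp_s: float   # derived from the frame index and frame rate
    bbox: tuple          # (x, y, width, height) in pixels
    attributes: dict = field(default_factory=dict)


def make_annotation(track_id, label, frame_index, fps, bbox, **attributes):
    """Build an annotation and fill in the timestamp from the frame rate."""
    return FrameAnnotation(
        track_id, label, frame_index, frame_index / fps, bbox, attributes
    )


# Hypothetical example: a red car seen at frame 45 of a 30 fps video.
ann = make_annotation(7, "car", 45, 30.0, (120, 80, 64, 48),
                      occluded=False, color="red")
record = json.dumps(asdict(ann))
```

Keeping the track ID, timestamp, and attributes alongside the box is what lets downstream code group annotations into per-object sequences.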

Together, consistent tracking, structured labeling, and rich metadata form the foundation of high-quality video annotations that power reliable AI performance.

Accuracy

While both image and video annotation involve labeling objects, video annotation also requires annotators to track and synchronize objects across frames, ensuring continuity and consistency throughout the video.

Video annotation allows for a more comprehensive understanding of object behavior and movements over time. By annotating objects across frames, annotators create a continuous narrative of object activity, reducing the possibility of errors and providing a holistic view of the video footage. This ensures that the labeled objects are accurately represented throughout the entire video sequence.

In summary, accuracy is crucial in video annotation due to the need for continuity and consistency across frames. Video annotation provides a more comprehensive understanding of object behavior and reduces the possibility of errors compared to image annotation.
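A simple automated check for continuity is to compare each box with the one in the previous annotated frame and flag sudden jumps for human review. The sketch below uses intersection over union (IoU) for that comparison; the `flag_jumps` helper and the 0.5 threshold are assumptions for illustration, and fast-moving objects may legitimately need a lower threshold.

```python
def iou(a, b):
    """Intersection over union of two (x, y, width, height) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    overlap_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    overlap_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    intersection = overlap_w * overlap_h
    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0


def flag_jumps(track, min_iou=0.5):
    """Return frame indices whose box overlaps the previous box less than min_iou.

    track maps frame index -> (x, y, width, height) for a single object.
    """
    frames = sorted(track)
    return [
        cur for prev, cur in zip(frames, frames[1:])
        if iou(track[prev], track[cur]) < min_iou
    ]


# Hypothetical track in which the box jumps between frames 1 and 2.
track = {0: (0, 0, 10, 10), 1: (1, 0, 10, 10),
         2: (50, 50, 10, 10), 3: (51, 50, 10, 10)}
suspicious = flag_jumps(track)
```

A flagged frame is not necessarily wrong, but it is a cheap signal for where a reviewer should look first.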

The Pros of Video Annotation

The two most important advantages of video labeling boil down to data gathering and the temporal context videos provide.

Simplicity in Gathering Data

Rather than manually annotating every single frame in a video, annotation techniques such as keyframes and interpolation are used. These techniques involve annotating a few keyframes and then automatically generating annotations for the in-between frames.

This approach not only saves time and effort but also allows for the building of robust models with minimal annotation. By annotating keyframes and interpolating in-between frames, the model can learn to recognize and understand objects and actions in the video footage. This reduces the amount of manual annotation required and makes the annotation process more manageable.

The simplicity in data collection provided by video annotation is particularly beneficial in scenarios where there is a large volume of video data. Rather than manually annotating every frame, annotators can focus on keyframes and let the annotation tool interpolate the annotations for the remaining frames.

Temporal Context

Temporal context provides machine learning (ML) models with valuable information about object movement and occlusion. Unlike image annotation, where each frame is treated independently, video annotation takes into account the temporal dimension of the data.

By considering multiple frames in sequence, video annotation allows ML models to understand how objects move and interact over time. This knowledge of object motion is essential for accurate object tracking, activity recognition, and action prediction tasks. Without temporal context, ML models might struggle to differentiate between different object instances or accurately predict future states.

Additionally, temporal context helps ML models deal with challenging scenarios such as occlusion, where objects are partially or completely hidden from view. By analyzing multiple frames, the model can infer occluded objects’ positions and trajectories, improving overall performance.

To further enhance network performance and handle temporal context effectively, video annotation techniques can incorporate temporal filters and Kalman filters. Temporal filters smooth out noise and inconsistencies in the annotation process, ensuring that the motion information is accurately represented. Kalman filters are used to estimate the state of objects based on previous observations, allowing ML models to make informed predictions even in the presence of noisy or incomplete data.
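The sketch below shows the idea behind a Kalman filter in its simplest form: a one-dimensional filter applied to a single coordinate, such as the x position of a box center. It assumes a random-walk model (the position is expected to stay roughly where it was), which is a simplification; production trackers more commonly use a constant-velocity model with a matrix state. The function name, the noise parameters, and the use of `None` to mark frames where the object was occluded are all assumptions for this example.

```python
from typing import Optional


def kalman_smooth(measurements: list[Optional[float]],
                  process_var: float = 0.01,
                  meas_var: float = 1.0) -> list[float]:
    """Smooth a 1D coordinate series with a scalar (random-walk) Kalman filter.

    A measurement of None means the object was not observed in that frame;
    the filter then keeps its prediction and only grows its uncertainty.
    """
    if not measurements or measurements[0] is None:
        raise ValueError("the first measurement must be present")
    estimate = float(measurements[0])
    error = meas_var  # variance of the current estimate
    smoothed = [estimate]
    for z in measurements[1:]:
        # Predict: the state is assumed unchanged, but uncertainty grows.
        error += process_var
        if z is not None:
            # Update: blend prediction and measurement by their uncertainty.
            gain = error / (error + meas_var)
            estimate += gain * (z - estimate)
            error *= 1.0 - gain
        smoothed.append(estimate)
    return smoothed


# Hypothetical noisy x coordinates with one occluded frame.
xs = kalman_smooth([10.0, 12.0, 8.0, None, 10.0])
```

Raising `meas_var` makes the filter trust the annotations less and smooth more; raising `process_var` lets it follow real movement more quickly.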

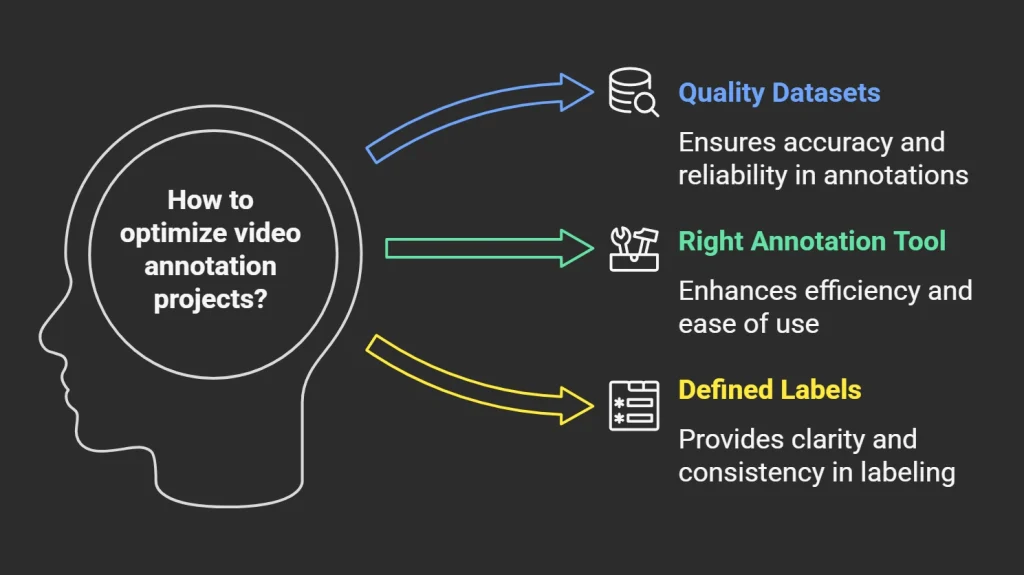

Video Annotation Best Practices

To ensure accurate and effective annotation, certain best practices should be followed. Read on as we outline the most important elements of a successful video annotation project.

Work with Quality Datasets

Ensuring you have a high-quality dataset at your disposal should be your first step because it will significantly impact the accuracy and reliability of the annotated results. Annotating videos with low-quality or duplicate data can lead to incorrect annotations, which can ultimately affect the performance of vision models or the identification of objects in video footage.
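As a minimal sketch of one such check, exact duplicate frames or files can be detected by hashing their raw bytes before annotation starts. The function name `find_duplicates` is hypothetical, and this approach only catches byte-identical copies; near-duplicates (for example, re-encoded frames) would need a perceptual comparison instead.

```python
import hashlib


def find_duplicates(items: list[bytes]) -> list[tuple[int, int]]:
    """Return (first_index, duplicate_index) pairs for byte-identical items."""
    first_seen = {}
    duplicates = []
    for index, data in enumerate(items):
        digest = hashlib.sha256(data).hexdigest()
        if digest in first_seen:
            duplicates.append((first_seen[digest], index))
        else:
            first_seen[digest] = index
    return duplicates


# Hypothetical raw frame contents; the third item repeats the first.
pairs = find_duplicates([b"frame-a", b"frame-b", b"frame-a"])
```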

To maintain the quality of the dataset when working with annotation tools, it is recommended to opt for software that employs lossless frame compression. Lossless compression ensures that the dataset’s quality is not degraded during the annotation process. This is particularly important when dealing with large video files as it helps preserve the original details and maintains the integrity of the annotation.

Choose the Right Annotation Tool

User-friendly, feature-rich video annotation software can greatly enhance the efficiency and accuracy of the annotation process.

One important feature to consider is auto-annotation. This feature uses AI algorithms to automatically generate annotation masks or labels, reducing the manual effort required for annotation. It saves time and ensures consistency across annotations.

The ability to automate repetitive annotation tasks can significantly speed up the annotation process and streamline workflows. This is especially beneficial when dealing with large-scale video datasets.

Finally, ease of use should also be considered. An annotation tool should have an intuitive interface and be easy to navigate. It is recommended to try out the tool before making a purchase decision to ensure it meets your specific requirements and fits seamlessly into your annotation workflow.

Define the Labels You Are Going to Use

Using the right labels in a machine learning project is essential for achieving accurate results. Annotators involved in the task need to understand how the dataset is going to be used when training an ML model.

For example, if object detection is the goal, annotators need to label objects with bounding boxes whose coordinates are accurate, so that the objects’ positions can be extracted reliably. Similarly, if classification of an object is required, it’s important to define the class labels ahead of time and apply them consistently.

This allows the labeling process to go more quickly and efficiently, since no additional annotation work is needed after everything has already been labeled. Understanding how a dataset is going to be used before annotating also helps prevent inconsistencies within the dataset, which can lead to unreliable machine learning results.

It is vital for any machine learning project that proper labeling techniques and strategies be employed throughout the entire workflow in order for impactful results to be realized.
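One lightweight way to enforce an agreed label set is to validate annotations against it before they enter the training pipeline. In the sketch below, the label set, the dictionary layout with a `"label"` key, and the `find_invalid_labels` helper are all hypothetical.

```python
# Hypothetical label set agreed on before annotation starts.
ALLOWED_LABELS = {"car", "pedestrian", "cyclist"}


def find_invalid_labels(annotations, allowed=ALLOWED_LABELS):
    """Return (index, label) pairs for annotations whose label is not allowed.

    The comparison is case-sensitive on purpose, so that "Car" and "car"
    are reported rather than silently treated as the same class.
    """
    return [
        (index, ann["label"])
        for index, ann in enumerate(annotations)
        if ann["label"] not in allowed
    ]


problems = find_invalid_labels(
    [{"label": "car"}, {"label": "Car"}, {"label": "truck"}]
)
```

Running a check like this on every batch catches spelling variants and undeclared classes early, before they turn into inconsistent training data.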

Keyframes and Interpolation

Keyframes and interpolation are important concepts in video annotation that help streamline the annotation process and ensure accurate and efficient labeling.

Keyframes are the frames in a video that annotators label by hand, so that the rest of the video does not have to be annotated manually. These frames serve as representative samples that capture the key information or changes in the video. By selecting keyframes strategically, annotators can minimize the amount of annotation needed while still capturing the essential aspects of the video.

To extend the keyframe annotations to the rest of the video, interpolation is used. Interpolation is the process of automatically generating annotations for the frames between keyframes. It uses the information from the annotated keyframes to infer and assign labels to the intermediate frames. This technique saves time and effort by removing the need to annotate every single frame manually.
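The simplest form of this is linear interpolation of bounding boxes between two keyframes, sketched below. The `Box` type and the `interpolate_boxes` function are assumptions for illustration; commercial tools may use more sophisticated methods, such as tracking-based propagation, and linear interpolation will drift when an object changes speed or direction between keyframes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    x: float
    y: float
    w: float
    h: float


def interpolate_boxes(keyframes: dict[int, Box]) -> dict[int, Box]:
    """Linearly interpolate a box for every frame between annotated keyframes.

    keyframes maps frame index -> manually annotated Box. The result covers
    every frame from the first keyframe to the last, inclusive.
    """
    frames = sorted(keyframes)
    result = {}
    for start, end in zip(frames, frames[1:]):
        a, b = keyframes[start], keyframes[end]
        span = end - start
        for frame in range(start, end):
            t = (frame - start) / span  # 0.0 at start, approaching 1.0 at end
            result[frame] = Box(
                a.x + t * (b.x - a.x),
                a.y + t * (b.y - a.y),
                a.w + t * (b.w - a.w),
                a.h + t * (b.h - a.h),
            )
    if frames:
        result[frames[-1]] = keyframes[frames[-1]]
    return result


# Hypothetical object annotated at frames 0 and 4 only.
boxes = interpolate_boxes({0: Box(0, 0, 10, 10), 4: Box(8, 4, 10, 10)})
```

Here two manual annotations produce five labeled frames; adding a keyframe wherever the motion changes keeps the interpolated boxes close to the object.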

While keyframes and interpolation provide efficiency, it is still crucial to plan and watch the entire footage before starting the annotation process. This ensures that important details and variations in the video are not missed, allowing for comprehensive and accurate annotations.

Outsourcing Data Annotation vs Doing it In-House

Outsourcing data annotation helps reduce costs by eliminating the need for tools, infrastructure, and specialized staff, making it ideal for short-term projects or variable workloads.

In contrast, in-house annotation provides greater control, deeper alignment with business goals, and a better understanding of project-specific needs. It allows for higher flexibility, tighter quality control, and improved accuracy.

Ultimately, the right choice depends on your organization’s priorities. Consider factors like cost, timeline, expertise, and quality requirements to determine the best approach for your AI goals.

Frequently Asked Questions

What are the key challenges in video annotation?

Video annotation faces challenges like scalability due to high data volume, maintaining consistency across frames, dealing with dynamic content, subjective interpretations, ensuring data quality, and addressing privacy concerns.

What companies are using computer vision?

Tech giants like Google, Amazon, Microsoft, and Apple; automotive companies such as Tesla and Ford; data annotation firms like Aya Data; healthcare firms like IBM Watson Health; retail brands like Walmart; manufacturing companies like Foxconn; and security providers like Honeywell all use computer vision.

What is the difference between computer graphics and computer vision?

Computer graphics creates visual content from algorithms, while computer vision interprets existing visual information from the real world.

Is computer vision AI or ML?

Computer vision is a field of AI that often uses machine learning, especially deep learning, to process and understand visual data.