What is Semantic Segmentation for Computer Vision?

Semantic segmentation is a computer vision technique that uses deep learning (DL) to classify each pixel in an image according to the object or region it belongs to. It’s one of the three main types of image segmentation, alongside instance and panoptic segmentation, and plays a key role in helping computers interpret and understand visual scenes.

In simplest terms, the objective is to assign a class label to every pixel in an image.

For example, if you’re labeling a cat against a plain background, semantic segmentation labels every single pixel of the cat as a “cat”.
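In practice, a semantic mask is usually stored as a grid the same size as the image, holding one integer class ID per pixel. The minimal Python sketch below assumes that convention; the class table and the tiny 5x5 “cat” mask are hypothetical, and pixels outside the cat get a background class.

```python
# A minimal sketch: a semantic mask stores one class ID per pixel.
# The class table and the 5x5 mask below are hypothetical.
from collections import Counter

CLASS_NAMES = {0: "background", 1: "cat"}

# Each entry is the class ID of the pixel at that row and column.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

def pixel_counts(mask, class_names):
    """Count how many pixels carry each class label."""
    counts = Counter(class_id for row in mask for class_id in row)
    return {class_names[c]: n for c, n in sorted(counts.items())}

print(pixel_counts(mask, CLASS_NAMES))
```

Every pixel receives exactly one label, so the counts always add up to the total number of pixels in the image.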

Image segmentation requires pixel-level labels, which sets it apart from object detection.

Object detection aims to locate objects within an image by predicting bounding boxes or polygons, whereas image segmentation predicts masks that trace the boundaries between objects at the pixel level.
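One way to see the difference between the two outputs: a bounding box can always be computed from a mask, but not the other way round. This minimal sketch assumes a mask stored as a grid of class IDs; `mask_to_bbox` is a hypothetical helper, not a library function.

```python
# A minimal sketch: derive a bounding box from a pixel mask.
# The mask layout (row-major grid of class IDs) is an assumption.

def mask_to_bbox(mask, class_id):
    """Return (x_min, y_min, x_max, y_max) of pixels with class_id, or None."""
    coords = [
        (x, y)
        for y, row in enumerate(mask)
        for x, value in enumerate(row)
        if value == class_id
    ]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs), max(ys))

# An L-shaped object (class 1) on a background (class 0).
mask = [
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]

bbox = mask_to_bbox(mask, 1)
x_min, y_min, x_max, y_max = bbox
box_area = (x_max - x_min + 1) * (y_max - y_min + 1)
mask_area = sum(value == 1 for row in mask for value in row)
print(bbox, box_area, mask_area)
```

Here the box covers 9 pixels while the object itself covers only 5, so the box alone loses the object’s shape.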

Semantic segmentation is the most common form of data annotation for segmentation tasks.

Foundations of Semantic Segmentation

Semantic segmentation is a fundamental task in computer vision that involves labeling each pixel of an image with a specific class. Unlike simple classification, which assigns a single label to an entire image, semantic segmentation provides a more detailed understanding of the visual content.

This is made possible by deep learning models, particularly convolutional neural networks (CNNs), which learn to detect patterns, shapes, and structures within images. By breaking scenes down into meaningful segments, semantic segmentation enables applications in autonomous driving, medical imaging, agriculture, and more.

Semantic segmentation also differs from instance segmentation in how it treats multiple objects of the same class. With instance segmentation, you could have a class “human” and label each person separately as human 1, human 2, and so on. In semantic segmentation, every person is simply labeled “human”; there’s no distinction between instances.
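The difference can be sketched in a few lines of Python, assuming an instance mask stores a separate ID per person and a lookup table maps each ID to its class. Collapsing it to a semantic mask keeps the class and drops the identity. All IDs and names here are hypothetical.

```python
# A minimal sketch: collapsing an instance mask into a semantic mask.
# Instance IDs and the instance-to-class table are hypothetical.

# 0 = background; 1 and 2 are two different people ("human 1", "human 2").
instance_mask = [
    [1, 1, 0, 2, 2],
    [1, 1, 0, 2, 2],
]
instance_to_class = {0: "background", 1: "human", 2: "human"}

def to_semantic(instance_mask, instance_to_class):
    """Replace each instance ID with its class name, discarding identity."""
    return [[instance_to_class[i] for i in row] for row in instance_mask]

semantic_mask = to_semantic(instance_mask, instance_to_class)
print(semantic_mask[0])
```

Going the other way is not possible in general: once both people carry the same “human” label, the mask no longer records which pixels belonged to whom.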


Semantic Segmentation Example

Aya Data used semantic segmentation to label maize leaves for a maize disease identification model – read more in the case study.

The goal was to assist farmers in identifying diseases and recommending treatment options.

To train the model, Aya Data labeled digital photographs of real maize diseases to build a training dataset. Local agronomists helped us identify specific diseases so that we could label them accurately.

The dataset consisted of 5,000 high-quality images labeled using semantic segmentation, which made it possible to identify diseased leaves and assign each disease a class label. See an example of a labeled leaf below.

The resulting dataset facilitated the training of a computer vision model capable of detecting common maize diseases with 95% accuracy.

Farmers could access the model through an app that provided information on the disease type, severity, and treatment options.

Semantic segmentation was ideal here because it let us label the entire leaf with the disease class. Bounding boxes or polygons wouldn’t have been as effective, and instance segmentation was unnecessary because each image contains only one leaf.

Methods and Techniques for Semantic Segmentation

Tools for labeling images for semantic segmentation typically rely on graph-based methods, region merging, and clustering.

This sounds complex, but with modern labeling tools, grouping distinctive shapes at the pixel level is relatively straightforward.

If you’ve used Photoshop or another image editor, the process is similar to using the Magic Wand tool, which automatically selects a region of similar color and finds its boundary. Each pixel within the resulting mask is then labeled.

The “magic wand” tool automatically finds the boundaries between image features
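The Magic Wand idea can be approximated with region growing (a flood fill): start from a seed pixel and keep adding neighboring pixels whose intensity is close to the seed’s. The sketch below is a simplified stand-in, not how Photoshop or any particular labeling tool implements the feature; the grayscale image, the `tolerance` parameter and the helper names are assumptions for illustration.

```python
# A simplified "magic wand" sketch using region growing (flood fill).
# Not any real tool's algorithm; image, tolerance and names are hypothetical.
from collections import deque

def magic_wand(image, seed, tolerance):
    """Grow a region from `seed` over 4-connected pixels whose intensity
    is within `tolerance` of the seed pixel's intensity."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    selected = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        # Check the four direct neighbours (down, up, right, left).
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (
                0 <= nr < rows
                and 0 <= nc < cols
                and (nr, nc) not in selected
                and abs(image[nr][nc] - seed_value) <= tolerance
            ):
                selected.add((nr, nc))
                queue.append((nr, nc))
    return selected

def selection_to_mask(selection, rows, cols, class_id):
    """Label every selected pixel with class_id; all other pixels get 0."""
    return [
        [class_id if (r, c) in selection else 0 for c in range(cols)]
        for r in range(rows)
    ]

# Grayscale intensities: a bright leaf (~200) on a dark background (~30).
image = [
    [30, 32, 31, 29],
    [30, 205, 198, 28],
    [31, 201, 210, 30],
    [29, 30, 33, 31],
]
region = magic_wand(image, seed=(1, 1), tolerance=20)
mask = selection_to_mask(region, rows=4, cols=4, class_id=1)
for row in mask:
    print(row)
```

The `tolerance` value plays the same role as a tolerance setting in an image editor: raise it and the selection spreads into less similar pixels.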

Automatic labeling techniques have also been used to segment images based on features such as color, texture, and shape.
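Clustering is one such automatic technique. As a minimal sketch, the code below runs k-means on grayscale intensities alone, so pixels of similar brightness end up in the same group; real tools typically use richer features such as color channels and texture. The image, the choice of `k`, and the deterministic initialization are assumptions.

```python
# A minimal sketch of clustering-based segmentation: 1D k-means on
# grayscale intensities. Illustrative only; the image and k are hypothetical.

def kmeans_1d(values, k, iterations=10):
    """Cluster scalar values into k groups; return (centroids, labels)."""
    # Deterministic initialisation: spread centroids evenly from min to max.
    lo, hi = min(values), max(values)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)] if k > 1 else [lo]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value goes to its nearest centroid.
        labels = [min(range(k), key=lambda j: abs(v - centroids[j])) for v in values]
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            if members:
                centroids[j] = sum(members) / len(members)
    return centroids, labels

def segment_by_intensity(image, k):
    """Assign every pixel a cluster ID based on its intensity alone."""
    flat = [v for row in image for v in row]
    _, labels = kmeans_1d(flat, k)
    width = len(image[0])
    return [labels[i:i + width] for i in range(0, len(labels), width)]

image = [
    [20, 25, 200, 210],
    [22, 24, 205, 199],
    [120, 118, 122, 125],
]
clusters = segment_by_intensity(image, k=3)
for row in clusters:
    print(row)
```

The cluster IDs have no meaning on their own: someone still has to decide which cluster is, say, “leaf” and which is “soil”, which is one reason automatic techniques tend to assist annotators rather than replace them.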

Challenges of Semantic Segmentation

In terms of data annotation, semantic segmentation is more time-consuming than other methods like bounding boxes.

Some computer vision applications require large, diverse datasets with high-quality, pixel-level annotations, which are labor-intensive to produce. Class imbalance and rare classes complicate the process further, since a model sees far fewer labeled pixels of those classes.
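Class imbalance is easy to measure once masks exist: count how many pixels each class covers. A common (but not the only) mitigation is to weight each class inversely to its frequency during training. The minimal sketch below assumes masks stored as grids of class IDs; the class names and masks are hypothetical.

```python
# A minimal sketch: measure class imbalance across semantic masks and derive
# inverse-frequency class weights. Masks and class names are hypothetical.
from collections import Counter

def class_frequencies(masks):
    """Fraction of all labelled pixels that belong to each class ID."""
    counts = Counter(v for mask in masks for row in mask for v in row)
    total = sum(counts.values())
    return {c: n / total for c, n in sorted(counts.items())}

def inverse_frequency_weights(freqs):
    """Weight each class by 1/frequency, normalised so weights average to 1."""
    raw = {c: 1.0 / f for c, f in freqs.items()}
    mean = sum(raw.values()) / len(raw)
    return {c: w / mean for c, w in raw.items()}

# Two tiny 2x4 masks: 0 = healthy leaf, 1 = soil, 2 = rare disease lesion.
masks = [
    [[0, 0, 0, 1], [0, 0, 1, 1]],
    [[0, 0, 0, 0], [0, 1, 1, 2]],
]
freqs = class_frequencies(masks)
weights = inverse_frequency_weights(freqs)
print(freqs)
print({c: round(w, 2) for c, w in weights.items()})
```

The rare lesion class covers a single pixel out of sixteen, so it receives by far the largest weight.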

Semantic Segmentation Compared to Other Data Labeling Methods

Semantic segmentation is an extremely useful method for labeling images for supervised computer vision projects.

All labeling methods share the same fundamental goal: creating data that models can learn from. Semantic segmentation, however, differs from methods such as image classification, object detection, and instance segmentation in the level of detail it captures.

Here’s a comparison of these common image labeling techniques:

1: Image Classification

In image classification, the goal is to assign a single label to the entire image, representing its primary content or category. For example, an image with a cat against a backdrop of various household items would simply be labeled as “cat”, if the cat is the feature relevant to the model.

This technique does not provide any information about the location or shape of the objects within the image. It’s the simplest form of image labeling.

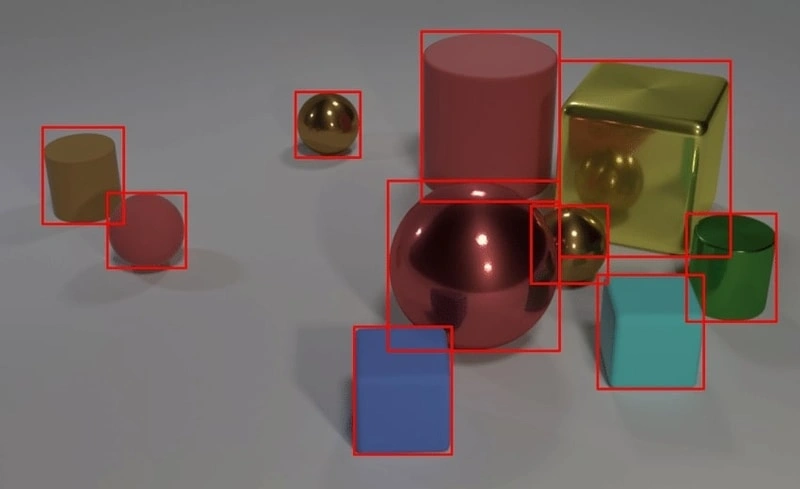

2: Object Detection

Simple object detection aims to locate and classify multiple objects within an image. It typically uses bounding boxes to enclose each identified object and label it.

While object detection provides information about the location and class of objects, it does not precisely outline their shapes or show which pixels belong to which object when boxes overlap.

For non-linear or complex shapes, bounding boxes can be replaced by polygons or key points.

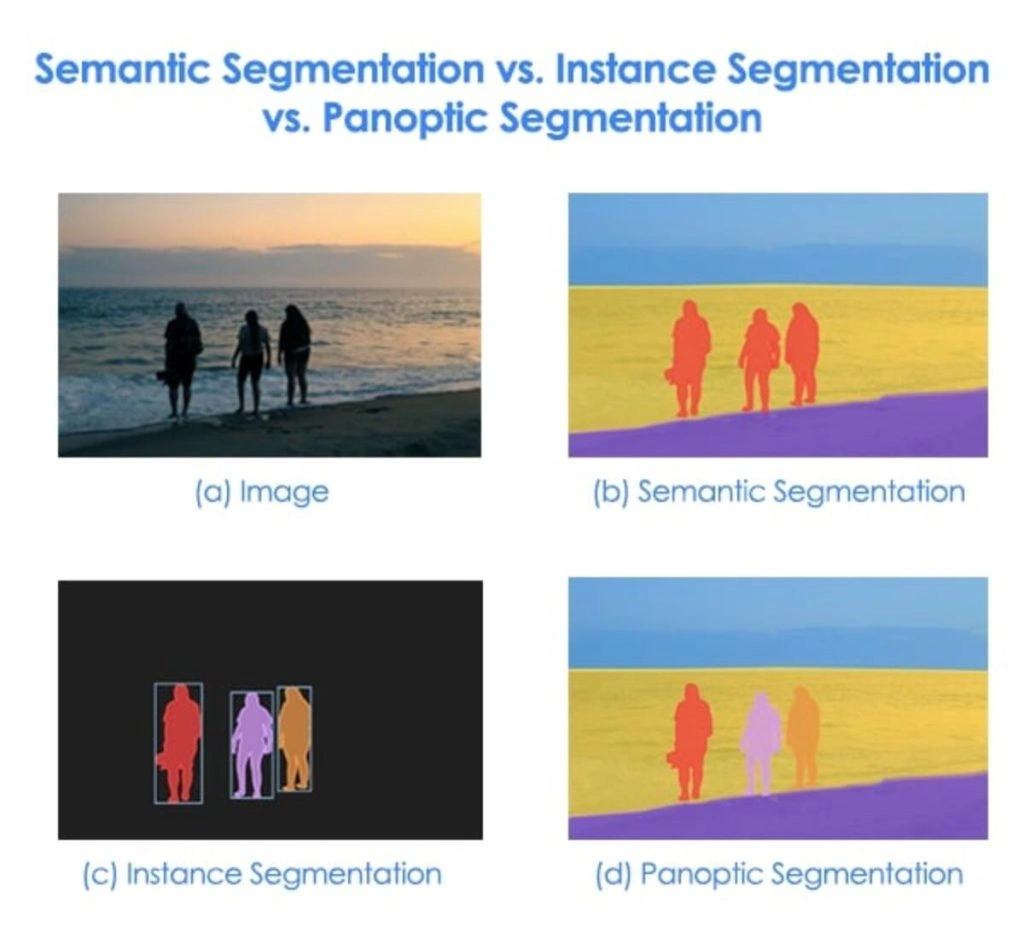
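Polygon annotations can also be converted into pixel masks, which is one way outlines drawn by annotators end up as segmentation labels. This minimal sketch marks a pixel when its center lies inside the polygon, using the even-odd (ray casting) rule; the triangle and the helper names are hypothetical, and the sketch ignores edge cases such as pixel centers that fall exactly on the outline.

```python
# A minimal sketch: rasterise a polygon into a pixel mask by testing each
# pixel centre with the even-odd (ray casting) rule.
# Vertices are (x, y) in pixel units; the example polygon is hypothetical.

def point_in_polygon(px, py, polygon):
    """Count polygon edges crossed by a ray going right from (px, py)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line y = py?
        if (y1 > py) != (y2 > py):
            # x-coordinate where the edge crosses that line.
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside

def polygon_to_mask(polygon, width, height, class_id=1):
    """Label pixels whose centre falls inside the polygon with class_id."""
    return [
        [
            class_id if point_in_polygon(x + 0.5, y + 0.5, polygon) else 0
            for x in range(width)
        ]
        for y in range(height)
    ]

# A right triangle with vertices at (0, 0), (4, 0) and (0, 3).
triangle = [(0, 0), (4, 0), (0, 3)]
triangle_mask = polygon_to_mask(triangle, width=4, height=3)
for row in triangle_mask:
    print(row)
```

The mask approximates the triangle’s sloped edge with a staircase of whole pixels, which is the trade-off of any pixel-level representation.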

3: Semantic Segmentation

As mentioned, semantic segmentation involves assigning a class label to each pixel in the image, providing a detailed understanding of object shapes and locations.

This method generates a pixel-level mask for each object class, but it does not distinguish between separate instances of the same class.

For example, suppose an image contains several cars. Semantic segmentation labels all of their pixels with the same “car” class, so the mask itself does not distinguish one car from another.

Semantic segmentation is ideal for accurately labeling complex or non-linear shapes.
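The “pixel-level mask for each object class” can be produced by splitting a single class-ID mask into one binary mask per class. This minimal sketch assumes that storage format; the road and car classes are hypothetical.

```python
# A minimal sketch: split a semantic mask (one class ID per pixel) into one
# binary mask per class. The class IDs are hypothetical.

def per_class_masks(mask, class_ids):
    """Return {class_id: binary mask} where 1 marks pixels of that class."""
    return {
        c: [[1 if v == c else 0 for v in row] for row in mask]
        for c in class_ids
    }

# 0 = road, 1 = car; the two cars (left and right) share class 1.
mask = [
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0],
]
binary = per_class_masks(mask, class_ids=[0, 1])
print(binary[1])
```

Both cars end up in the same binary mask for class 1, and nothing in the output records that there are two of them.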

4: Instance Segmentation

Instance segmentation is similar to semantic segmentation in that it labels individual pixels.

However, instance segmentation goes a step further by differentiating between separate instances of the same class. For example, if there are multiple cars in an image, instance segmentation will assign different labels to each car, even if they are of the same class.
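A minimal way to represent instance labels, assuming each pixel stores an instance ID and a separate table maps IDs to classes (a common pattern, but the format here is hypothetical). Counting distinct IDs recovers how many cars there are, which a semantic mask cannot tell you directly.

```python
# A minimal sketch of an instance-segmentation label: each pixel stores an
# instance ID and a table maps IDs to classes. IDs and classes are hypothetical.
from collections import Counter

# 0 = background (no instance); 1 and 2 are two different cars.
instance_mask = [
    [1, 1, 0, 2, 2],
    [1, 1, 0, 2, 2],
    [0, 0, 0, 0, 0],
]
instance_class = {1: "car", 2: "car"}

def instances_per_class(instance_mask, instance_class):
    """Count distinct instances of each class present in the mask."""
    present = {i for row in instance_mask for i in row if i != 0}
    return dict(Counter(instance_class[i] for i in present))

def instance_area(instance_mask, instance_id):
    """Number of pixels belonging to one instance."""
    return sum(v == instance_id for row in instance_mask for v in row)

print(instances_per_class(instance_mask, instance_class))
print(instance_area(instance_mask, 1), instance_area(instance_mask, 2))
```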

5: Panoptic Segmentation

Panoptic segmentation aims to assign a class label to every pixel in an image while also distinguishing between separate instances of the same class.

This method provides a unified approach to segmentation, where both the “stuff” classes (e.g., sidewalk, sky, grass, water) and the “thing” classes (e.g., car, cat, person, dog) are labeled at the pixel level, with individual instances being identified for “thing” classes.
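Panoptic labels need both pieces of information per pixel. One convention packs them into a single integer, `class_id * 1000 + instance`; the Cityscapes dataset, for example, uses a similar scheme for its instance IDs. The class IDs, the offset of 1000 and the helper names below are assumptions for illustration.

```python
# A minimal sketch of panoptic labels packed as class_id * OFFSET + instance.
# Class IDs, OFFSET and helper names are assumptions for illustration.

OFFSET = 1000  # assumed to exceed the number of instances of any class

STUFF = {0: "road", 1: "sky"}        # "stuff": no instances
THINGS = {10: "car", 11: "person"}   # "things": countable instances

def encode(class_id, instance=0):
    """Stuff pixels keep instance 0; thing pixels need an instance >= 1."""
    if class_id in STUFF and instance != 0:
        raise ValueError("stuff classes have no instances")
    if class_id in THINGS and instance < 1:
        raise ValueError("thing pixels need an instance number")
    return class_id * OFFSET + instance

def decode(panoptic_id):
    """Split a packed ID back into (class name, instance number)."""
    class_id, instance = divmod(panoptic_id, OFFSET)
    name = STUFF.get(class_id) or THINGS.get(class_id)
    return name, instance

# One row of pixels: sky, car 1, car 1, road, car 2.
row = [encode(1), encode(10, 1), encode(10, 1), encode(0), encode(10, 2)]
print(row)
print([decode(p) for p in row])
```

Stuff pixels such as road and sky carry only a class, while each car keeps its own instance number, which is the combination panoptic segmentation asks for.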

Applications of Semantic Segmentation in Computer Vision

Semantic segmentation has numerous applications across various CV domains:

- Medical Imaging: In medical imaging, semantic segmentation identifies tissues, lesions, evidence of cancer, etc.

- Autonomous Vehicles: Understanding roads, their components and features, and the surrounding environment is pivotal for autonomous vehicles (AVs). Semantic segmentation is used to label roads, pedestrians, vehicles, and other objects in the data used to train AV perception models.

- Robotics: Robots require a deep understanding of their environment to navigate the physical world. Semantic segmentation aids in environment perception, object manipulation, and other essential functions.

In essence, any supervised CV task that requires accurate pixel masks without needing to segment instances can apply semantic segmentation.

Summary: Semantic Segmentation for Computer Vision Projects Explained

Semantic segmentation enables computers to understand and interpret complex visual scenes and is key to training CV models for autonomous vehicles, medical imaging, and more. As Aya Data’s maize disease detection model shows, it also has novel and creative applications.

Contact Aya Data for your data labeling needs.

Frequently Asked Questions

What is the role of human annotators in semantic annotation for computer vision projects?

Human annotators play a critical role in semantic annotation for computer vision projects. They are responsible for accurately labeling each pixel in an image with the class it belongs to, typically using automated tools to speed up the process. Domain specialists can also contribute, ensuring the dataset is accurate and appropriate for the model.

Why is semantic annotation important for computer vision projects?

Semantic annotation is essential for computer vision projects as it provides detailed information about the objects and their boundaries in an image, enabling the model to understand and recognize complex scenes. Semantic annotation allows the model to accurately distinguish between different objects and their shapes by assigning a class label to each pixel.

How does semantic annotation differ from other forms of image annotation?

Semantic annotation focuses on assigning a class label to each pixel in an image, resulting in a more detailed understanding of object shapes and locations.

In contrast, other forms of image annotation, such as image classification, object detection, instance segmentation, and panoptic segmentation, have different objectives and levels of granularity. For example, semantic segmentation captures more detail than bounding boxes for object detection, but less than instance and panoptic segmentation, which also separate individual objects.